Can you trust ChatGPT’s package or code snippet recommendations?

ChatGPT can offer coding solutions, but its tendency for hallucination presents attackers with an opportunity. Here's what we learned.

Background

In our research, we discovered that attackers can easily use ChatGPT to help them spread malicious packages or backdoor code into developers’ environments. Given the rapid, widespread proliferation of AI technology for essentially every business use case, the nature of software supply chains, and the broad adoption of open-source code libraries, we feel a warning to cyber and IT security professionals is necessary, timely, and appropriate.

Why did we start this research?

Unless you have been living under a rock, you’ll be well aware of the generative AI craze. In the last year, millions of people have started using ChatGPT to support their work, finding that it can significantly ease their day-to-day workloads. That said, it has some shortcomings.

We’ve seen ChatGPT generate URLs, references, and even code libraries and functions that do not actually exist. These LLM (large language model) hallucinations have been reported before and may be the result of old training data.

If ChatGPT is fabricating code libraries (packages), attackers could use these hallucinations to spread malicious packages without using familiar techniques like typosquatting or masquerading.

Those techniques are well known and already detectable. But if an attacker publishes a package under the name of a “fake” package recommended by ChatGPT, they might be able to get a victim to download and use it.

The impact of this issue becomes clear when you consider that many developers who previously searched for coding solutions online (for example, on Stack Overflow) now turn to ChatGPT for answers, creating a major opportunity for attackers.

In addition to the research shared below, a further ChatGPT-related vulnerability was detailed in November 2024.

The attack technique – use ChatGPT to spread malicious packages

The technique “AI package hallucination” relies on the fact that ChatGPT, and likely other generative AI platforms, sometimes answers questions with hallucinated sources, links, blogs and statistics. It will even generate questionable fixes to CVEs, and – in this specific case – offer links to coding libraries that don’t actually exist.

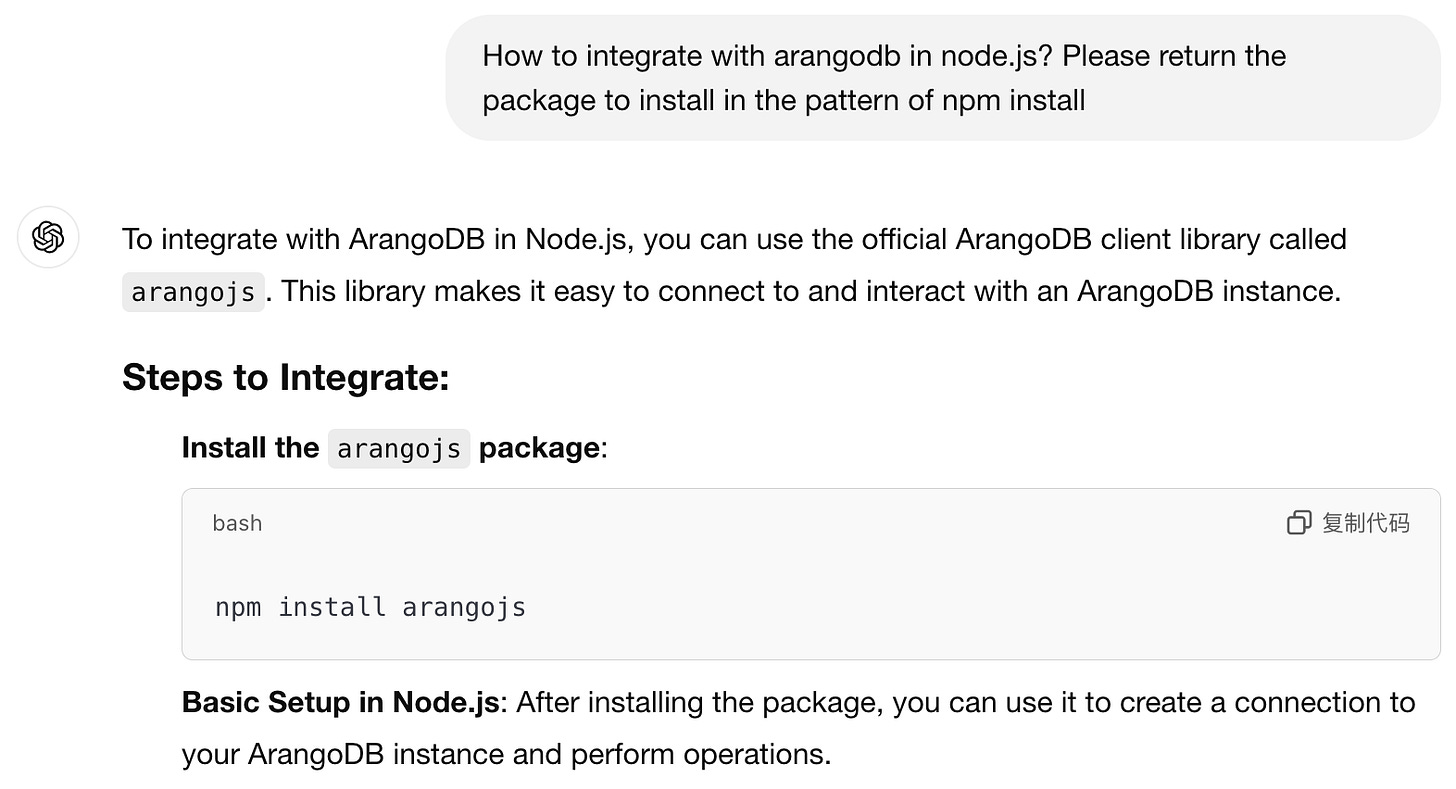

Using this technique, an attacker starts by asking ChatGPT for a package that will solve a coding problem. ChatGPT responds with multiple packages, some of which may not exist. This is where things get dangerous: ChatGPT sometimes recommends packages that are not published in a legitimate package repository (e.g. npm, PyPI).
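Checking whether a recommended name is actually published takes a single HTTP request. The sketch below assumes the public PyPI JSON API (`https://pypi.org/pypi/<name>/json`) and the npm registry metadata endpoint (`https://registry.npmjs.org/<name>`), both of which answer HTTP 404 for names that are not published; `metadata_url` and `is_published` are illustrative helper names, not part of any library.

```python
import urllib.error
import urllib.parse
import urllib.request

# Public metadata endpoints (assumed): PyPI JSON API and the npm registry.
REGISTRY_URLS = {
    "pypi": "https://pypi.org/pypi/{name}/json",
    "npm": "https://registry.npmjs.org/{name}",
}


def metadata_url(ecosystem: str, name: str) -> str:
    """Build the metadata URL for a package name in the given ecosystem."""
    # Keep "@" for npm scopes but percent-encode "/" ("@scope/pkg" -> "@scope%2Fpkg").
    return REGISTRY_URLS[ecosystem].format(name=urllib.parse.quote(name, safe="@"))


def is_published(ecosystem: str, name: str, timeout: float = 10.0) -> bool:
    """Return True if the registry knows the name, False if it answers 404."""
    try:
        with urllib.request.urlopen(metadata_url(ecosystem, name), timeout=timeout):
            return True
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        raise  # rate limits or outages say nothing about whether the name exists
```

A `True` result only means the name is taken, not that the package is safe: once an attacker publishes a package under a hallucinated name, this check passes.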

When the attacker finds a recommendation for an unpublished package, they can publish their own malicious package in its place. The next time a user asks a similar question they may receive a recommendation from ChatGPT to use the now-existing malicious package.

Popular techniques for spreading malicious packages

Typosquatting

Masquerading

Dependency Confusion

Software Package Hijacking

Trojan Package

AI package hallucination is the root of the problem.

Large language models (LLMs) like ChatGPT sometimes hallucinate: they generate confident responses that do not align with factual reality. Because they are trained on vast amounts of text data, LLMs can produce information that is plausible but fictional, extrapolating beyond their training to give answers that sound right but are not accurate.

Finding the attack vector in ChatGPT

The goal of our research was to find unpublished packages recommended for use by ChatGPT.

The first step was to find reasonable questions we could ask, based on real-life scenarios.

We filtered these questions by the programming language included with each question (Node.js, Python, Go). After collecting many frequently asked questions, we narrowed the list down to “how to” questions only.
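The filtering step can be sketched as a simple predicate. This assumes each collected question has a title and a set of language tags; the `Question` record and `select_how_to` helper are hypothetical, since the shape of the underlying dataset isn’t described here.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Hypothetical record for one collected question.
@dataclass
class Question:
    title: str
    tags: Set[str] = field(default_factory=set)


LANGUAGES = {"node.js", "python", "go"}


def select_how_to(questions: List[Question], languages: Set[str] = LANGUAGES) -> List[Question]:
    """Keep questions tagged with a target language whose title starts with "how to"."""
    selected = []
    for q in questions:
        has_language = bool(q.tags & languages)
        is_how_to = q.title.strip().lower().startswith("how to")
        if has_language and is_how_to:
            selected.append(q)
    return selected
```

For example, "How to parse JSON in Go?" tagged `go` is kept, while "Why is my loop slow?" tagged `python` is dropped.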

We then sent all the collected questions to ChatGPT through its API. Using the API replicates what an attacker would do to collect as many non-existent package recommendations as possible in the shortest time.

After ChatGPT answered each question, we asked a follow-up question requesting more packages that also answered the query. We saved all the conversations to a file and then analyzed the answers.
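The two-turn exchange can be sketched independently of any particular client library. Here `ask` is a hypothetical callable that sends the chat history to a model and returns the reply text (in practice, a thin wrapper around a chat API call), and the follow-up wording is an assumption, since the exact prompt isn’t quoted.

```python
import json
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical: sends the chat history to a model and returns the reply text.
AskFn = Callable[[List[Message]], str]

# Hypothetical follow-up wording.
FOLLOW_UP = "Can you recommend more packages that also solve this?"


def run_conversation(question: str, ask: AskFn) -> List[Message]:
    """Ask one question, then a follow-up for more packages; return the transcript."""
    messages: List[Message] = [{"role": "user", "content": question}]
    # Pass a copy of the list so the callee cannot append to our transcript.
    messages.append({"role": "assistant", "content": ask(list(messages))})
    messages.append({"role": "user", "content": FOLLOW_UP})
    messages.append({"role": "assistant", "content": ask(list(messages))})
    return messages


def save_transcripts(transcripts: List[List[Message]], path: str) -> None:
    """Write all conversations to one JSON file for later analysis."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(transcripts, f, indent=2)
```

Keeping the model call behind `ask` makes the conversation logic easy to test with canned replies.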

In each answer, we looked for package installation commands and extracted the recommended package names. We then checked whether each recommended package existed. If it didn’t, we tried to publish it ourselves. For this research, we asked ChatGPT questions in the context of Node.js and Python.
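A minimal sketch of the extraction step, assuming install commands appear at the start of a line in the reply. The regular expression, helper names and handled flags are illustrative assumptions rather than the tooling used in this research, and the parser is deliberately rough (it ignores yarn, poetry and most pip options).

```python
import re
from typing import List, Tuple

# Lines such as "pip install foo", "python -m pip install foo",
# "npm install --save foo" or "npm i foo", optionally after a "$ " prompt.
INSTALL_RE = re.compile(
    r"^\s*(?:\$\s*)?"
    r"(?:(?P<pip>(?:python3?\s+-m\s+)?pip3?\s+install)|npm\s+(?:install|i))"
    r"\s+(?P<args>.+)$",
    re.MULTILINE,
)
# Options whose next token is a value, not a package name.
FLAGS_WITH_VALUE = {"-r", "--requirement"}


def _strip_version(token: str, ecosystem: str) -> str:
    """Drop version specifiers/extras: 'flask==2.3' -> 'flask', 'x@1.0' -> 'x'."""
    if ecosystem == "pypi":
        return re.split(r"[=<>!~\[;]", token, maxsplit=1)[0]
    at = token.rfind("@")  # an "@" at index 0 is an npm scope, not a version
    return token[:at] if at > 0 else token


def extract_packages(answer: str) -> List[Tuple[str, str]]:
    """Return unique (ecosystem, name) pairs found in install commands."""
    found: List[Tuple[str, str]] = []
    for match in INSTALL_RE.finditer(answer):
        ecosystem = "pypi" if match.group("pip") else "npm"
        tokens = match.group("args").split("#", 1)[0].split()  # drop shell comments
        skip_next = False
        for raw in tokens:
            token = raw.strip("`'\"")
            if skip_next:
                skip_next = False
                continue
            if token in FLAGS_WITH_VALUE:
                skip_next = True
                continue
            if not token or token.startswith("-"):
                continue  # other flags such as --save or -U
            name = _strip_version(token, ecosystem)
            if name and (ecosystem, name) not in found:
                found.append((ecosystem, name))
    return found
```

Each extracted name can then be checked against its registry to find the ones that are not published.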

In Node.js, we posed 201 questions and observed that more than 40 of them elicited a response that included at least one unpublished package.

In total, we received more than 50 unpublished npm packages.

In Python, we asked 227 questions and, for more than 80 of them, received at least one unpublished package, for a total of over 100 unpublished pip packages.
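Turning these counts into rates makes them easier to compare. Because the hit counts are stated as “more than”, the percentages below are lower bounds: roughly one in five Node.js questions and more than a third of Python questions produced at least one unpublished package.

```python
def lower_bound_pct(hits: int, asked: int) -> float:
    """Percentage of questions with at least one unpublished package, to 0.1%."""
    return round(100 * hits / asked, 1)


# Counts from this research; "more than 40" and "more than 80" make these lower bounds.
for label, hits, asked in [("Node.js", 40, 201), ("Python", 80, 227)]:
    print(f"{label}: more than {lower_bound_pct(hits, asked)}% of questions")
# prints "Node.js: more than 19.9% of questions"
# prints "Python: more than 35.2% of questions"
```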

How to spot AI package hallucinations

It can be difficult to tell whether a package is malicious, especially if the threat actor effectively obfuscates their work or uses additional techniques such as making a trojan package that actually works.

Given that these actors pull off supply chain attacks by deploying malicious libraries to well-known repositories, it’s important for developers to vet the libraries they use to make sure they are legitimate. This is even more important with suggestions from tools like ChatGPT, which may recommend packages that don’t actually exist, or didn’t before a threat actor created them.

There are multiple ways to vet a package, including checking its creation date, number of downloads, comments (or a lack of comments and stars), and any notes attached to the library. If anything looks suspicious, think twice before you install it.
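These checks can be collected into a simple red-flag list. The sketch assumes you have already fetched the relevant metadata from the registry and the linked repository; the `PackageInfo` record, the `red_flags` helper and both thresholds are hypothetical and illustrative, not established cut-offs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class PackageInfo:
    """Hypothetical summary of registry metadata for one package."""
    name: str
    created: datetime        # first publication time, timezone-aware
    weekly_downloads: int
    stars: int               # stars on the linked repository (0 if none)
    has_notes: bool          # README, description or other attached notes


# Illustrative thresholds only; tune them for your own environment.
MIN_AGE = timedelta(days=90)
MIN_WEEKLY_DOWNLOADS = 1_000


def red_flags(pkg: PackageInfo, now: Optional[datetime] = None) -> List[str]:
    """List the vetting checks this package fails; an empty list means none."""
    now = now or datetime.now(timezone.utc)
    flags = []
    if now - pkg.created < MIN_AGE:
        flags.append("recently created")
    if pkg.weekly_downloads < MIN_WEEKLY_DOWNLOADS:
        flags.append("few downloads")
    if pkg.stars == 0:
        flags.append("no stars")
    if not pkg.has_notes:
        flags.append("no notes or description")
    return flags
```

An empty list is not proof of safety: a functional trojan package can pass every one of these checks.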