Let's talk about LLM Red Teaming

Since I shifted my focus from cloud security to LLM security in June this year, I have invested a lot of time in LLM vulnerability mining and analysis, and have been conducting extensive discussions with people in the industry. The most frequently asked questions can be roughly summarized as follows:

What are the security risks associated with LLMs/Agents?

What are the hazards of LLMs security risks? Will LLMs security risks be like ransomware and worms in the era of cloud security bring huge financial losses to enterprises?

How to effectively conduct LLMs security quantification assessment?

How to effectively fix known LLMs security vulnerabilities?

How to continuously monitor and measure the security risks of LLMs, and avoid them in advance before asset losses occur?

Thanks to all these discussions, I realized that a number of things that I take for granted LLM Red Teaming wise are 1) not widely spread ideas 2) apparently interesting.

So let's share the conversation more broadly!

LLM Red Teaming is part of LLM Evaluation

Firstly, let's analyze some basic noun concepts.

LLM Evaluation

LLM Evaluation refers to the process of assessing the performance, quality, safety, and behavior of Large Language Models (LLMs). This involves systematically testing models to determine how well they meet specific criteria or objectives, such as accuracy, fluency, relevance, ethical alignment, and robustness. Evaluation is critical for understanding an LLM's strengths and weaknesses, improving its performance, and ensuring it meets user needs and safety standards.

Here are some key dimensions of LLM Evaluation:

Performance Evaluation:

Measuring how accurately and effectively the model performs tasks, such as answering questions, summarizing text, or generating content.

Metrics often used:

Perplexity: A measure of how well the model predicts a sequence of words.

BLEU/ROUGE/Meteor: Metrics for text generation quality.

Exact Match (EM)/F1-Score: For QA tasks, assessing the overlap between generated and correct answers.

Human-Centric Evaluation:

Fluency: Is the language output natural and grammatically correct?

Relevance: Does the response directly address the user's query or context?

Coherence: Are the generated responses logical and well-structured?

Helpfulness: Does the response provide valuable and actionable information?

Safety and Ethical Evaluation:

Testing for harmful or unethical outputs, such as biased, offensive, or misleading content.

Scenarios include:

Bias analysis across sensitive attributes (e.g., gender, race).

Assessing responses to harmful or unethical prompts.

Measuring adherence to ethical principles.

Robustness Evaluation:

Testing the model's resilience to adversarial inputs, edge cases, or out-of-distribution data.

Example scenarios:

Handling incomplete or ambiguous prompts.

Resisting attempts to bypass safeguards (e.g., jailbreak prompts).

Alignment Evaluation:

Ensuring the model aligns with user intents and organizational or societal goals.

Example:

Evaluating if the model follows user instructions accurately.

Testing alignment with predefined ethical or safety guidelines.

Efficiency Evaluation:

Measuring computational efficiency, response times, and scalability.

Relevant for real-world applications where performance at scale matters.

By using robust and multi-faceted evaluation processes, developers can ensure that LLMs are safe, effective, and aligned with user and societal expectations.

LLM Red Teaming

LLM Red Teaming refers to the process of testing, probing, and evaluating Large Language Models (LLMs) to identify vulnerabilities, weaknesses, or unintended behaviors. This typically involves using adversarial methods to simulate potential real-world threats, ensuring the model behaves securely, ethically, and robustly under various scenarios.

It is similar to "red teaming" in cybersecurity, where a team of experts mimics attackers to test a system's defenses.

Here are some key goals of LLM Red Teaming:

Identify Security Vulnerabilities:

Test how the model handles malicious prompts or attempts to bypass safety mechanisms.

Look for exploits like jailbreaks, prompt injections, or data leakage.

Ensure Ethical Behavior:

Probe for bias, harmful content, or unethical responses.

Ensure the model adheres to ethical AI principles, such as fairness and inclusivity.

Evaluate Robustness:

Test edge cases and adversarial inputs to ensure the model can handle them gracefully.

Identify areas where the model is overly confident or misrepresents facts.

Guard Against Harmful Use Cases:

Simulate how bad actors might misuse the LLM for harmful purposes (e.g., generating disinformation, phishing, or malware).

Recommend mitigations to reduce the risk of misuse.

Improve Safety and Alignment:

Test whether the model follows intended instructions while avoiding harmful behavior.

Align the model more closely with user and societal expectations.

What's the difference of Evaluation and Red Teaming?

The difference between LLM Evaluation and LLM Red Teaming lies in their goals, methods, and focus areas, though both are essential for improving the safety, reliability, and performance of Large Language Models (LLMs).

Here's a detailed comparison.

Purpose and Goals

LLM Evaluation:

The primary goal is to measure and assess the model's overall performance across various dimensions like accuracy, relevance, fluency, safety, and alignment.

It focuses on quantifying the quality of the model, identifying strengths and weaknesses, and providing insights for iterative improvement.

Answers questions like:

"How good is the model at performing specific tasks?"

"How safe, aligned, or efficient is the model under normal use?"

LLM Red Teaming:

The primary goal is to test the model under adversarial or extreme conditions to identify vulnerabilities, edge cases, and failure points.

It focuses on challenging the model's defenses, safeguards, and robustness.

Answers questions like:

"How can the model be exploited or abused?"

"What are the worst-case scenarios or harmful outputs the model might produce?"

Methods and Techniques

LLM Evaluation:

Uses standardized benchmarks (e.g., datasets for summarization, QA, or translation tasks) to measure performance.

Involves human evaluation for subjective aspects like fluency, relevance, and helpfulness.

Automated metrics (e.g., BLEU, ROUGE, perplexity) and human ratings are commonly used.

Focuses on typical use cases and regular interactions.

LLM Red Teaming:

Uses adversarial methods to probe for weaknesses, such as:

Crafting malicious or adversarial prompts (e.g., jailbreaks, prompt injections).

Testing with edge cases, ambiguous queries, or adversarial inputs.

Simulating malicious use cases (e.g., attempts to generate harmful or illegal content).

Focuses on worst-case scenarios, exploring how the model behaves under stress or in malicious contexts.

Scope of Application

LLM Evaluation:

Broad and general-purpose.

Covers typical user interactions, task-specific capabilities, and overall quality.

Seeks to measure performance across a wide range of metrics: accuracy, robustness, fluency, safety, etc.

LLM Red Teaming:

Narrow and adversarial.

Focuses specifically on testing weaknesses, such as safety, bias, robustness, and ethical failures.

Concentrates on stress-testing and risk discovery rather than general performance.

Focus Areas

Outputs

LLM Evaluation:

Results in quantitative metrics (e.g., accuracy scores, bias measures, human ratings).

Provides a holistic view of the model's performance, helping guide iterative development and improvements.

LLM Red Teaming:

Results in qualitative insights (e.g., vulnerabilities, weaknesses, failure modes).

Provides a risk assessment to inform safety mitigations and safeguard improvements.

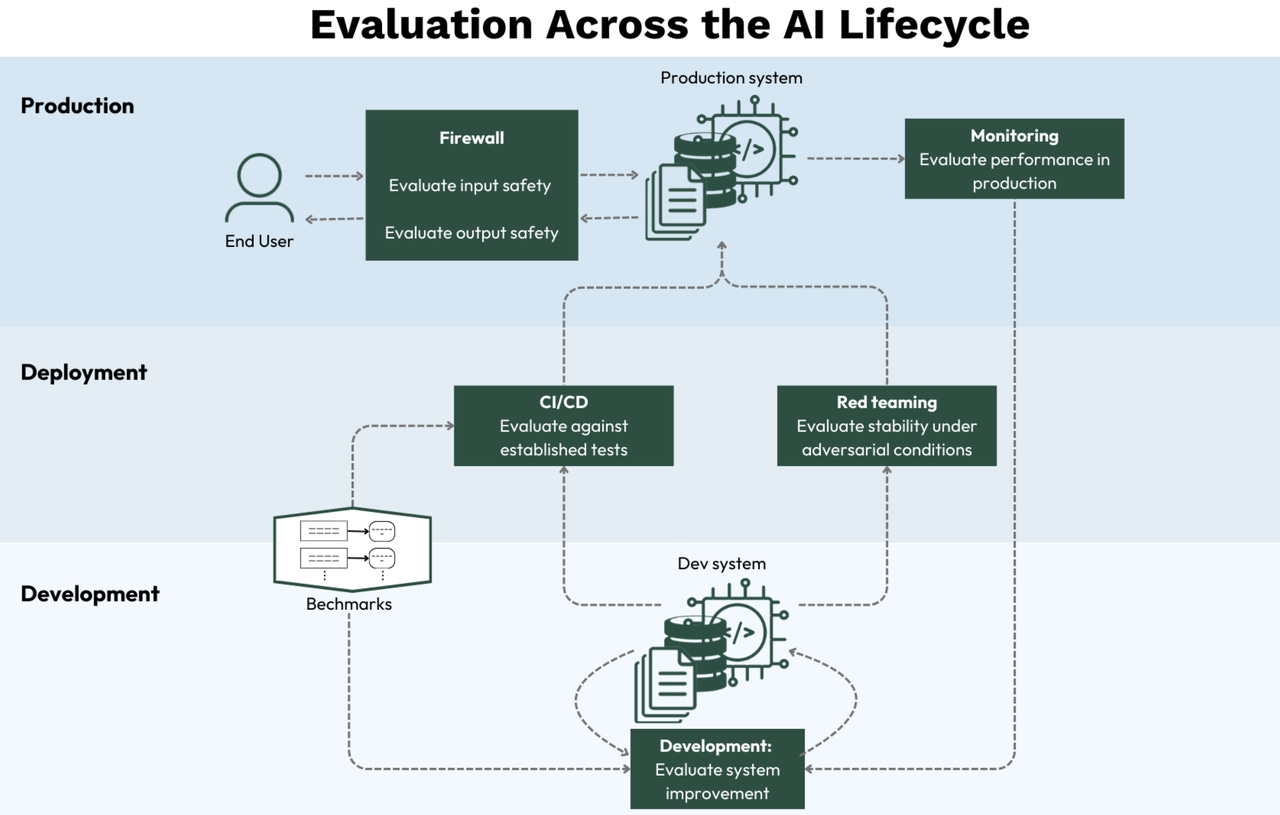

Timing in the Development Cycle

LLM Evaluation:

Performed regularly throughout the development cycle.

Used to monitor improvements, benchmark performance, and ensure the model meets general expectations.

LLM Red Teaming:

Typically performed during later stages of development or deployment as a final "stress test."

Used to uncover risks that may not emerge during standard evaluation.

LLM Red Teaming is part of LLM Evaluation

In short:

LLM Evaluation focuses on how well the model works under normal conditions.

LLM Red Teaming focuses on how the model breaks under adversarial or malicious conditions.

Both are complementary and necessary for building LLMs that are high-quality, safe, and robust.

The challenges for LLM Red Teaming

Challenge 1: LLM Red Teaming metrics are hard to make accurate and reliable

The performance of most discriminative models can be quantified by statistical metrics (like BLEU and ROUGE scores) that measure how closely the model’s decisions align with what is categorically correct.

But if ChatGPT said, “Sup, bitch?” is that actually bad? Perhaps ChatGPT should be aware that certain youthful folk can find derogatory salutations to be a tasteful form of an endearment.

Nowadays, most LLM Red Teaming metrics nowadays are LLM-Based-Judge, which means using LLMs to evaluate LLM outputs. They are used on 3 main tasks:

Scoring a model generation, on a provided scale, to assess a property of the text (fluency, toxicity, coherence, persuasiveness, etc).

Pairwise scoring: comparing a pair model outputs to pick the best text with respect to a given property

Computing the similarity between a model output and a reference.

LLM-Based-Judge have been used for the following points:

Objectivity when compared to humans: They automate empirical judgments in an objective and reproducible manner.

Scale and reproducibility: They are more scalable than human annotators, which allows to reproduce scoring on large amounts of data.

Cost: They are cheap to instantiate, as they don't require to train a new model, and can just rely on good prompting and an existing high quality LLM. They are also cheaper than paying actual human annotators.

Alignment with human judgments: They are somehow correlated with human judgments.

Although LLMs have superior reasoning capabilities that make them great candidates for evaluating LLM outputs, there are also downside to all of these:

LLM as judges seem objective, but they have many hidden biases that can be harder to detect than the ones in humans, since we're not as actively looking for them. Besides, LLM-prompting is not as robust yet. Using LLMs to evaluate LLMs has been compared to creating an echo-chamber effect, by reinforcing biases subtly.

They are indeed scalable, but contribute to creating massive amounts of data which themselves need to be examined to ensure their quality (for example, you can improve the quality of LLM-judges by asking them to generate a thinking trace, or reasoning around their data, which makes even more new artificial data to analyse)

They are indeed cheap to instantiate, but paying actual expert human annotators is likely to give you qualitatively better results for your specific use cases.

Challenge 2: Datasets/Testing cases are hard to keep comprehensive and adversarial

Preparing an Red Teaming dataset that covers all the edge cases that might appear in a production setting is difficult and hard to get the right task. Unfortunately, it is also a very time-consuming problem.

There are several core aspects that need to be considered in the design of the Red Teaming dataset/test cases:

Comprehensive coverage of data modalities.

Covering comprehensive data domains such as compliance, misuse, and high-risk security vulnerabilities.

Advanced data automatic synthesis and database variant methods.

Data pollution, once data on the internet is publicly available in plain text form, it will inevitably appear in the model training set due to data crawlers. So it is difficult to guarantee that the model has not really seen the test set during evaluation.

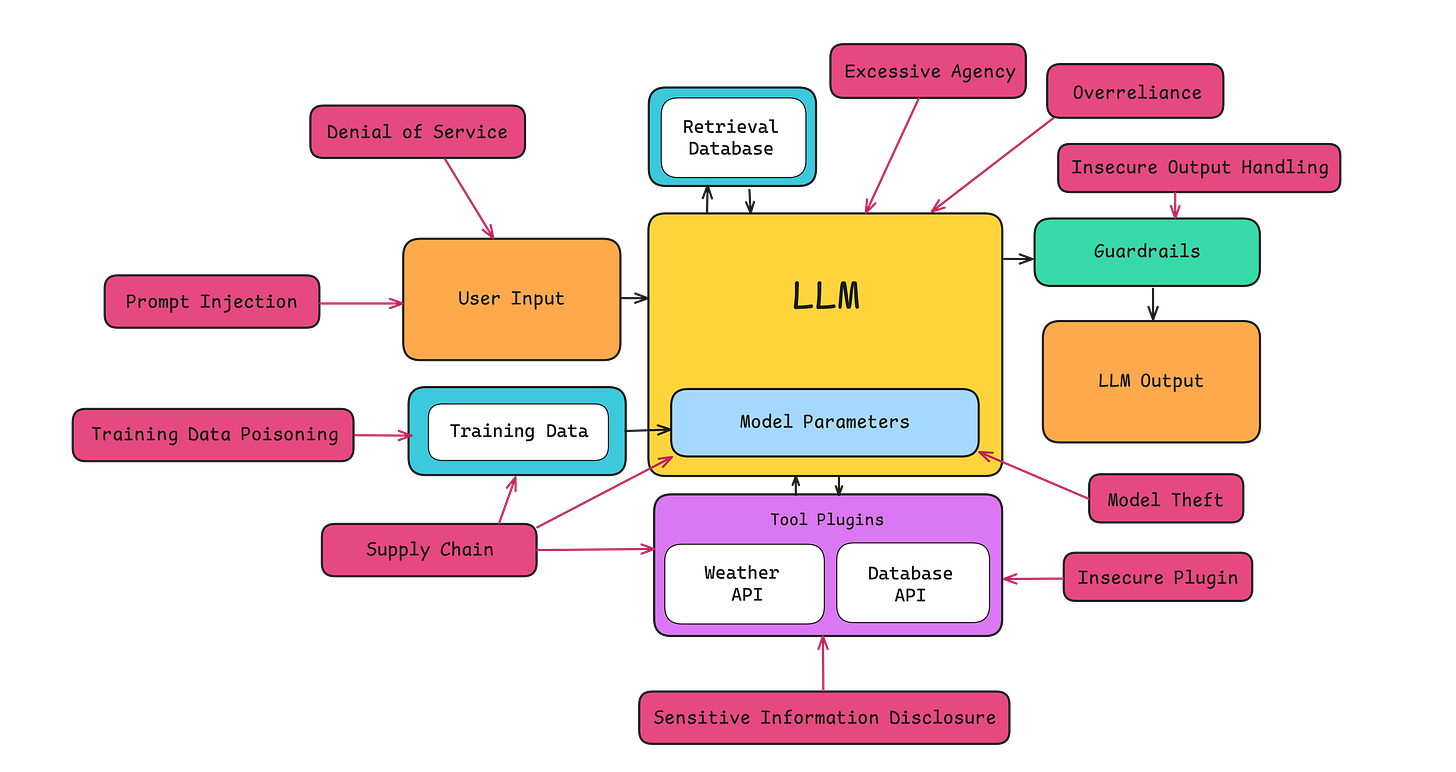

Challenge 3: Complex systems pipeline driven by AI are hard to conduct comprehensive testing

An LLM system refers to the complete setup that includes not only the LLM itself but also additional components such as function tool calling (for agents), retrieval systems (in RAG), response caching, etc., that makes LLMs useful for real-world applications.

For example, a text-to-SQL generator app would include embedding component, vector database, ranking algorithm, base model.

Testing an LLM system, is therefore not as straightforward as testing an LLM itself. While both LLMs and LLM systems receive and generate textual outputs, the fact that there can be several components working in conjunction in an LLM system means you should apply LLM evaluation metrics more granularly to evaluate different parts of an LLM system for maximum visibility into where things are going wrong (or right).

Challenge 4: LLMs are probabilistic machines

LLMs are probabilistic machines, which makes defining deterministic test cases inadequate.

Issue #1: Unlimited input/output possibilities lead to unlimited possible issues. When your model is responsible for “generating” something new, however, for better or worse, that something could theoretically be anything. Generative AI product owners don’t just need to think about the correctness of the model’s answer, but its safety, relevance, toxicity, brand alignment, presentation of hallucination, etc.

Issue #2: Data plane and control plane are difficult to separate. The way AI fuses the control plane and data plane of an application is a security concern that comes to fruition when (a) models are generative and (b) inference is exposed to the user. In “traditional” ML this isn’t too much of a problem because the model can effectively be treated as an extension of the data plane. Inputs and outputs are wrangled into a constrained, predefined format that result in one of a preset number of outcomes – an AV’s camera captures an image of a stoplight, the image is processed and sent to the model, the model detects a green light, the code tells the car to go. It is not just the architecture of the model that constrains the system, but also the interface. In a traditional application, SQL injections are a known attack path to breach the data plane from user input, and parallel concerns over prompt injections are on the rise for LLMs.

How TrustAI’s unique advantages in LLM Red Teaming

TrustRed is a commercial solution that providing a one-stop LLMs/AI system evaluation platform, which can be accessed by online SaaS or offline local SDK.

Essentially, TrustRed is a dynamic software testing system.

TrustEval's core features and differentiated advantages.

Industry leading security&safety assessment scope and dataset, covering 6 major domains and over 50+ subtypes. Based on cloud threat intelligence and AI research team, continuously organize and update the latest evaluation datasets, ensure that enterprises can continuously obtain the most objective perception of the security & safety performance of their model/apps.

With the continuous expansion of LLMs' application fields, their effective operation and performance evaluation have become increasingly complex. Initially, LLMs were designed to enhance traditional natural language processing tasks such as translation, summarization, and question answering, but now they have also shown great potential in multiple fields such as code generation and finance, with more application scenarios typically requiring more evaluation data.

Comprehensive evaluation metrics, relying on advanced LLMs in the cloud for automated judges for evaluation results, ensuring the effectiveness of evaluation reports.

Diversified integrate methods, compatible with HTTP API, static model repository, CI/CD integration, maximizing compatibility with convenience and data privacy requirements.

Continuously update the latest academic research results and community threat intelligence, incorporating automated attack modules based on research-backed techniques to test multiple LLM applications simultaneously.

We are fully committed to continuously improving and iterating our product to enhance its features and deliver an exceptional user experience. We warmly welcome you to try our product and share your valuable feedback, so we can work together to create an even better solution!

I am pleased to share two LLMs scanning reports generated automatically by the TrustRed. The content of the reports may no longer represent our latest work results. We are developing a new UI interface to display TrustRed's scanning results in real time and provide online evaluation. We hope to release them smoothly next month!😄

📚📚📚

Red Teaming Report of AI Security and Safety - Google.Gemini AI - 20241220.pdf

Password:62k76&14

Testing Report of AI Security and Safety - AWS.Bedrock - 20241209.pdf

Password:7i26359#

📚📚📚

Future of AI System Evaluation

As AI continues its relentless march forward, risk assessment is becoming increasingly critical for organisations looking to build and deploy AI responsibly. By establishing a robust risk assessment framework and mitigation plan, you can reduce the likelihood of AI-related incidents, earn trust with your customers, and reap benefits like improved reliability and fairness across different demographics.

Remember, in the world of AI, it's not just about being fast, it's about being fast and right. And that starts with understanding and managing your risks.

As organizations try to balance the powerful capabilities of LLMs with the importance of safeguarding AI System, we will all need to maintain a security-first mindset. By staying informed about emerging threats and integrating cutting-edge security solutions, businesses can harness the full potential of LLMs while ensuring robust protection and assessment against ever-evolving security risks. To find out more about how TrustEval can assist in enhancing your security measures for LLMs, Click here.

LLM Red Teaming frameworks and tools

TrustAI - TrustRed

TrustAI - TrustRed, a LLMs Safety and AI Application Security company, provides security solutions for artificial intelligence algorithms, models, and the data that power them.

With a first-of-its-kind, intelligent fuzz approach to conduct regression testing and securing AI, TrustAI is helping to protect the world’s most valuable technologies.

TrustAI - TrustRed was founded by AI professionals and security specialists with first-hand experience of how difficult adversarial AI attacks can be to detect and defend against. Determined to prove these attacks are preventable, the team developed a unique, patent-pending, productized AI security solution to help all organizations monitor risk and protect important property.

Amazon Bedrock

Amazon's entry into the LLM space – Amazon Bedrock – includes evaluation capabilities. It's particularly useful if you're deploying models on AWS. SuperAnnotate integrates with Bedrock, allowing you to build data pipelines using SuperAnnotate's editor and fine-tune models with Bedrock.

Auzre PyRIT

PyRIT (Python Risk Identification Toolkit for generative AI) is an open automation framework, to empower security professionals and machine learning engineers to proactively find risks in their generative AI systems.

Nvidia Nemo

Nvidia Nemo is a cloud-based microservice designed to automatically benchmark both state-of-the art foundation and also custom models. It evaluates them using a variety of benchmarks, which include those from academic sources, customer submissions, or using LLMs as judges.

HydroX AI

HydroX evaluation is a SaaS platform for AI model risk assessment.

LangSmith

Developed by Anthropic, LangSmith offers a range of evaluation tools specifically designed for language models. It's particularly strong in areas like bias detection and safety testing.

DSPy

DSPy is a framework for algorithmically optimizing LM prompts and weights, especially when LMs are used one or more times within a pipeline.

Inspect

Inspect is a framework for large language model evaluations.

Project Moonshot

Project Moonshot is a LLM Evaluation Toolkit.

Promptfoo

promptfoo test your LLM app locally

Garak

garak is a generative AI Red-teaming & Assessment Kit

Mindgard

Mindgard is a SaaS platform for AI model risk assessment.

ParlAI

ParlAI is a framework for training and evaluating AI models on a variety of openly available dialogue datasets

DeepEval

Deep Eval is an open-source library that offers a wide range of evaluation metrics and is designed to be easily integratedinto existing ML pipelines.

References

https://huggingface.co/blog/clefourrier/llm-evaluation

https://github.com/huggingface/evaluation-guidebook/blob/main/contents/automated-benchmarks/some-evaluation-datasets.md

https://github.com/huggingface/lighteval/wiki/Metric-List

https://github.com/huggingface/evaluation-guidebook/blob/main/contents/model-as-a-judge/basics.md

https://github.com/huggingface/evaluation-guidebook/blob/main/translations/zh/contents/automated-benchmarks/designing-your-automatic-evaluation.md