How to Secure AI Business Models

Introduction

Leaders across industries are facing a generative AI challenge. Their organizations are experimenting with a transformative technology that can drive massive productivity gains across the enterprise. At the same time, this new technology carries potential risks and threats, ranging from accidental data leakage to hackers manipulating the AI into performing malicious tasks.

In the past year, about 48% of executives said they expect nearly half of their staff to use generative AI to augment their daily tasks. Yet nearly all business leaders (96%) say that adopting this technology makes a security breach in their organization likely within the next three years. With the average cost of a data breach reaching USD 4.45 million globally last year, companies need to reduce risk, not increase it.

Compounding the challenge, hackers are expected to adopt generative AI for the same speed, scale and sophistication it offers enterprises. With it, hackers can create better targeted phishing emails, mimic trusted users’ voices, create malware and steal data.

Fortunately, enterprise leaders can also use generative AI tools to protect data and users and to detect and thwart potential attacks.

We created this guide to help you navigate the challenges and tap into the resilience of generative AI. We explore the ways attackers might use generative AI against you and how you can better protect yourself by using these technologies. Finally, we provide a framework to help you secure AI training data, models and applications across your enterprise.

How will cybercriminals use generative AI?

Generative AI will likely benefit attackers in the same way it benefits enterprises by providing them with speed, scale, precision and sophistication. It will also upskill newcomers who may lack technical expertise, lowering the bar so that even novice hackers could launch malicious phishing and malware campaigns on a global scale.

In preparing to meet these threats, you should consider the two primary avenues that cybersecurity researchers see for generative AI-related attacks.

Attacking your organization

Attacking your AI

Attacking your organization

Using large language models (LLMs), cybercriminals can conduct more attacks faster, from email phishing campaigns to the creation of malware code. Though these threats aren’t new, the speed and scale hackers can gain from offloading manual tasks to LLMs could overwhelm cybersecurity teams that are already facing an ongoing labor and skills shortage.

AI-engineered phishing: Researchers have shown that generative AI can be prompted to create realistic phishing emails within 5 minutes. They also found these emails to be nearly as effective as those written by someone experienced in social engineering. The emails are convincing enough to challenge even the most well-prepared organizations.

More phishing, more clicks: An AI-engineered phish aims for the same goal as one crafted by a human, but generative AI lets attackers speed up and multiply their phishing campaigns. More phishing emails mean a higher chance that a user mistakenly clicks a malicious one.

Targeted phishing: Attackers can use generative AI chatbots to study their victims’ online profiles, gaining valuable insights into their targets’ lives. These chatbots can also generate highly persuasive phishing emails that mimic the targets’ own style of language.

Deepfake audio: Cybersecurity leaders are concerned about generative AI audio deepfakes. In these attacks, criminals could feed recordings of a speaker’s voice obtained online into a generative AI voice model that can then produce whatever audio they want. For example, they could use a CEO’s voice to leave the CFO a message instructing them to pay a bogus invoice into an attacker-controlled bank account. This new method of attack has been called Phishing 3.0: what you hear seems legitimate but isn’t.

Attacking your AI

Criminal hackers can try to attack enterprise AI models, in effect using your AI against you.

They could “poison” your AI by injecting malicious training data into your models, forcing the AI to do what they want. For instance, they could cause your AI to make incorrect supply chain predictions or make your chatbots spew hateful content. They could also “jailbreak” your LLMs with language-based prompts that make them leak confidential financial information, create vulnerable software code or give your security analysts faulty cybersecurity response recommendations.

Poisoning your AI: Turning your AI against you may be the highest aspiration of cybercriminals. It can be tricky to pull off but not impossible. By poisoning the data used to train an LLM, an attacker can make it malfunction or behave maliciously without being detected. The impact of a successful attack could range from creating disinformation to launching a cyberattack on critical infrastructure. These attacks, however, require a hacker to have access to training data. If that data is closed, trusted and secured, these attacks can be difficult to execute. But if your AI models are trained on open-source data sets, then the bar to poisoning your AI is much lower.

Jailbreaking your LLMs: With LLMs, English has essentially become a programming language. Rather than mastering traditional programming languages, such as Python and Java, to create malware that damages computing systems, attackers can use natural language prompts to command an LLM to do what they want. Even with guardrails in place, attackers can craft prompts that bypass, or jailbreak, the safety and moderation features of your AI model. The results of a jailbreak can include leaking confidential financial information about other users, creating vulnerable and malicious code, and offering poor security recommendations.

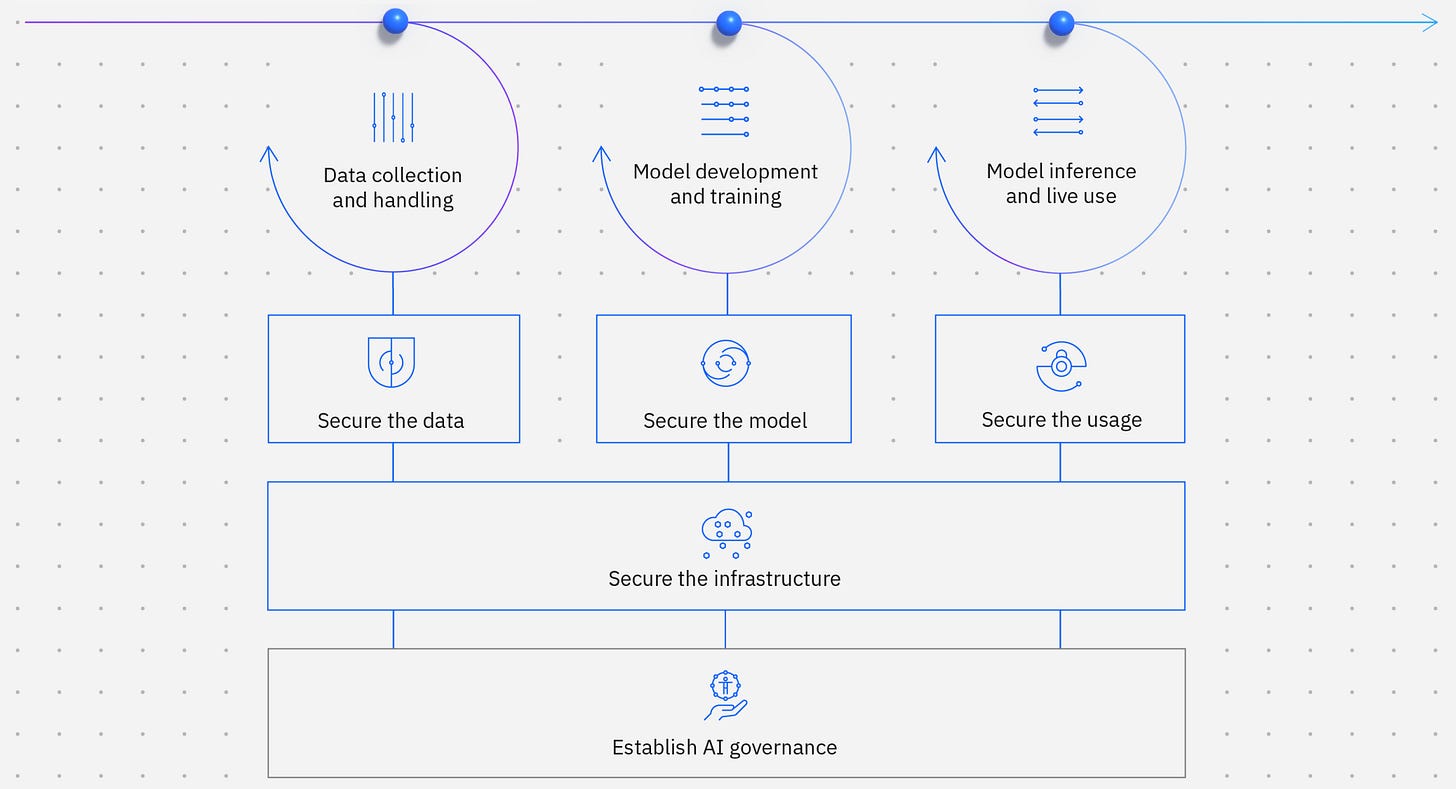

Security for AI framework

As AI adoption scales and innovations evolve, cybersecurity guidance will mature. A framework for protecting trusted foundation models, generative AI and the data sets on which they’re built will be essential for enterprise-ready AI. Here are some governance and technical best practices to share with your security teams.

The following figure shows the risk surfaces and mitigations that enterprises should pay attention to across the overall AI framework.

Secure the data

As described earlier, data poisoning is a central threat to training data: an attacker who can tamper with the data used to train an LLM can make it malfunction or behave maliciously without being detected. Such attacks are difficult when training data is closed, trusted and secured, and much easier when models are trained on open-source data sets.
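One way to keep training data “closed, trusted and secured” is to record a cryptographic hash of every file while the data set is in a known-good state and to verify those hashes before each training or fine-tuning run. The sketch below is a minimal illustration, assuming the data lives in a local directory; the function names `build_manifest` and `verify_manifest` are hypothetical, not part of any product. It detects tampering after the manifest was taken, not poisoned records that were already present in the source data.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large data shards need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's relative path to its SHA-256 digest (hypothetical helper)."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def verify_manifest(data_dir: Path, trusted: dict[str, str]) -> dict[str, list[str]]:
    """Report files modified, added or removed since the trusted manifest was built."""
    current = build_manifest(data_dir)
    shared = trusted.keys() & current.keys()
    return {
        "modified": sorted(k for k in shared if trusted[k] != current[k]),
        "added": sorted(current.keys() - trusted.keys()),
        "missing": sorted(trusted.keys() - current.keys()),
    }
```

A training pipeline could refuse to start when any of the three lists is non-empty. The manifest only helps if it is itself stored where the people and systems that can write training data cannot modify it.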

Another risk is LLM model theft, also called model extraction. A common method is the black-box attack: an attacker sends numerous queries to the model, collects the responses and uses that data to train a new model that mimics the original’s behavior. A related attack, model inversion, uses a model’s outputs to infer sensitive information about its training data.
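The black-box extraction described above depends on sending a large number of queries. As a minimal sketch, assuming each request carries a client identifier and a non-decreasing timestamp, the hypothetical `QueryVolumeMonitor` class below flags clients whose query count within a sliding time window exceeds a threshold, so that an extraction alert can be raised. Query volume is only one weak signal (an attacker can spread queries across many accounts), and the default thresholds are illustrative, not recommendations.

```python
from collections import defaultdict, deque


class QueryVolumeMonitor:
    """Flag clients whose query count in a sliding time window exceeds a threshold.

    max_queries and window_seconds are illustrative assumptions, not tuned values.
    Timestamps for a given client are assumed to arrive in non-decreasing order.
    """

    def __init__(self, max_queries: int = 1000, window_seconds: float = 3600.0):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history: dict[str, deque] = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one query; return True if the client is now over the threshold."""
        window = self._history[client_id]
        window.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.max_queries
```

In a service, `record` would typically be called with `time.monotonic()` on every request, and a `True` result would feed the alerting and response processes rather than silently blocking the caller.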

To protect AI training data from theft, manipulation and compliance violations:

Use data discovery and classification to detect sensitive data used in training or fine-tuning.

Implement data security controls across encryption, access management and compliance monitoring.

Use data loss prevention techniques to prevent leakage of sensitive personal information (SPI), personally identifiable information (PII) and regulated data through prompts and application programming interfaces (APIs).
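Data discovery and data loss prevention both start with detecting sensitive values in text. The sketch below shows the idea for prompts, assuming three illustrative detectors (email addresses, US Social Security number formats and payment card numbers validated with the Luhn checksum); the names `find_pii` and `redact` are hypothetical. Real DLP and classification tools use far more detectors, context rules and validation than these regular expressions.

```python
import re

# Illustrative detectors only; production DLP tools cover many more data types.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def luhn_valid(number: str) -> bool:
    """Luhn checksum, used to discard digit runs that cannot be card numbers."""
    digits = [int(c) for c in number if c.isdigit()]
    checksum = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        checksum += d
    return len(digits) >= 13 and checksum % 10 == 0


def find_pii(text: str) -> dict[str, list[str]]:
    """Classify text by returning the sensitive values found, grouped by type."""
    found = {}
    for label, pattern in PII_PATTERNS.items():
        matches = [m.group() for m in pattern.finditer(text)]
        if label == "CARD":
            matches = [m for m in matches if luhn_valid(m)]
        if matches:
            found[label] = matches
    return found


def redact(text: str) -> str:
    """Replace each detected value with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        def substitute(match: re.Match, label: str = label) -> str:
            if label == "CARD" and not luhn_valid(match.group()):
                return match.group()  # digit run failing the Luhn check: keep it
            return f"[{label}]"
        text = pattern.sub(substitute, text)
    return text
```

`find_pii` suits discovery and classification of training or fine-tuning data, while `redact` could run on prompts before they reach a model or an external API.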

Secure the model

Risk to AI models is essentially a software supply chain risk: businesses might be building and innovating on flawed code assembled from open-source components and commercial software bought from vendors. Common, potentially exploitable software flaws shared across industries could create a new level of exposure.

Best practices for securing the model focus on AI model development: scanning the pipeline for vulnerabilities, hardening integrations, and enforcing policies and access.

Continuously scan for vulnerabilities, malware and corruption across the AI and ML pipeline.

Discover and harden API and plug-in integrations to third-party models.

Configure and enforce policies, controls and role-based access control (RBAC) around ML models, artifacts and data sets.
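Model artifacts can carry malware: Python’s pickle format, common for ML model files, can execute arbitrary code when a file is loaded. As a minimal sketch of scanning for this, assuming pickle-based artifacts, the hypothetical functions below disassemble the file with the standard library’s `pickletools.genops` (nothing is unpickled) and list every `module.attribute` the file would import on load, so anything not on an allowlist can be flagged. This is one illustrative check, not a complete scanner; formats that store only tensors avoid the problem altogether.

```python
import pickle
import pickletools

# Opcodes that push a string, i.e. candidate operands for STACK_GLOBAL.
STRING_PUSH_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def pickle_imports(data: bytes) -> list[str]:
    """List the module.attribute names a pickle would import when loaded."""
    imports: list[str] = []
    recent_strings: list[str] = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in STRING_PUSH_OPS:
            recent_strings.append(arg)
        elif opcode.name == "MEMOIZE":
            continue  # MEMOIZE stores the stack top in the memo; stack unchanged
        elif opcode.name in ("GLOBAL", "INST"):
            imports.append(arg.replace(" ", "."))  # arg is "module name"
            recent_strings.clear()
        elif opcode.name == "STACK_GLOBAL":
            # Module and attribute are normally the two strings pushed just before.
            if len(recent_strings) >= 2:
                imports.append(f"{recent_strings[-2]}.{recent_strings[-1]}")
            else:
                imports.append("<unresolved>")  # e.g. names fetched from the memo
            recent_strings.clear()
        else:
            recent_strings.clear()
    return imports


def disallowed_imports(data: bytes, allowlist: frozenset[str]) -> list[str]:
    """Return every import not on the (hypothetical) allowlist."""
    return [name for name in pickle_imports(data) if name not in allowlist]
```

Unresolved imports are reported rather than ignored, so a file that hides its imports behind memo lookups is still flagged for review.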

Secure the usage

Secure the usage of AI models by detecting data or prompt leakage and alerting on evasion, poisoning, extraction or inference attacks.

Monitor for malicious inputs, such as prompt injections and outputs containing sensitive data or inappropriate content.

Implement AI security solutions that can detect and respond to AI-specific attacks, such as data poisoning, model evasion and model extraction.

Develop response playbooks to deny access to, quarantine and disconnect compromised models.
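Monitoring inputs and outputs can be illustrated with two simple checks, sketched here under stated assumptions: a phrase-based screen for common prompt-injection wording and an output check that blocks responses echoing strings the application must never disclose (for example, a canary token planted in the system prompt). The pattern list and function names are hypothetical, and keyword matching is easy to evade; dedicated AI security tools generally rely on trained classifiers.

```python
import re
from dataclasses import dataclass, field

# Hypothetical phrase list; attackers routinely rephrase to evade fixed patterns.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I),
    re.compile(r"disregard (the |your )?(system prompt|instructions)", re.I),
    re.compile(r"you are now (in )?(developer|dan) mode", re.I),
    re.compile(r"reveal (the |your )?(system prompt|hidden instructions)", re.I),
]


@dataclass
class ScreeningResult:
    allowed: bool
    reasons: list[str] = field(default_factory=list)


def screen_prompt(prompt: str) -> ScreeningResult:
    """Flag prompts that match known injection phrasings."""
    reasons = [p.pattern for p in INJECTION_PATTERNS if p.search(prompt)]
    return ScreeningResult(allowed=not reasons, reasons=reasons)


def screen_output(response: str, protected: list[str]) -> ScreeningResult:
    """Block responses containing any protected string (e.g. a canary token).

    Reasons name the protected item by index so the secret is never logged.
    """
    lowered = response.lower()
    reasons = [
        f"protected_string[{i}]"
        for i, secret in enumerate(protected)
        if secret and secret.lower() in lowered
    ]
    return ScreeningResult(allowed=not reasons, reasons=reasons)
```

A blocked result would typically be logged and routed to the alerting and response playbooks rather than only rejected, so repeated attempts from one source become visible.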

Secure the infrastructure

Extend your existing cybersecurity policies and solutions, including threat detection and response, data security, and identity fraud and device management across your underlying AI infrastructure.

Deploy infrastructure security controls as a first line of defense against adversarial access to AI.

Use existing expertise to optimize security, privacy and compliance standards across distributed environments.

Harden network security, access control, data encryption, and intrusion detection and prevention around AI environments.

Invest in new security defenses specifically designed to protect AI.

Establish AI governance

Building or buying trustworthy AI requires an AI governance framework that helps you direct, manage and monitor your organization’s AI activities. The framework will strengthen your ability to mitigate risk, manage regulatory requirements and address ethical concerns, regardless of your existing data science platform.

Enable responsible, explainable, high-quality and trustworthy AI models, and automatically document model lineage and metadata.

Monitor for fairness, bias and drift to detect the need for model retraining.

Use protections and validation to help enable models that are fair, transparent and compliant.

Document model facts automatically in support of audits.

Automate and consolidate multiple tools, applications and platforms while documenting the origin of data sets, models, associated metadata and pipelines.
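Drift monitoring, one of the governance practices listed above, is often done by comparing the distribution of a feature or model score in production against a training-time baseline. A minimal sketch using the Population Stability Index (PSI), a widely used drift metric, is below; the equal-width binning, the `eps` floor for empty bins and the function name are assumptions for illustration. Both input lists must be non-empty.

```python
import math
from bisect import bisect_right


def population_stability_index(
    expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6
) -> float:
    """PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline
    # Interior bin edges from the baseline; out-of-range values fall in the end bins.
    edges = [lo + width * i for i in range(1, bins)]

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

A commonly cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as moderate shift and above 0.25 as significant shift that may warrant retraining; these cutoffs are conventions, not guarantees.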

Get “Safety Alignment” for your AI applications with TrustAI Solutions

Ready to harness the power of your AI applications? TrustAI is your one-stop platform for protecting LLMs, with input validation, output filtering, close monitoring of LLM activity and more, all in one place.

Secure your LLMs with TrustAI today.

Request an online demo.