Trying the on-device LLM in Google Chrome

Background

When we build features with AI models on the web, we often rely on server-side solutions for larger models. This is especially true for generative AI, where even the smallest models are about a thousand times larger than the median web page. It's also true for other AI use cases, where models can range from tens to hundreds of megabytes.

While server-side AI is a great option for large models, on-device and hybrid approaches have their own compelling upsides.

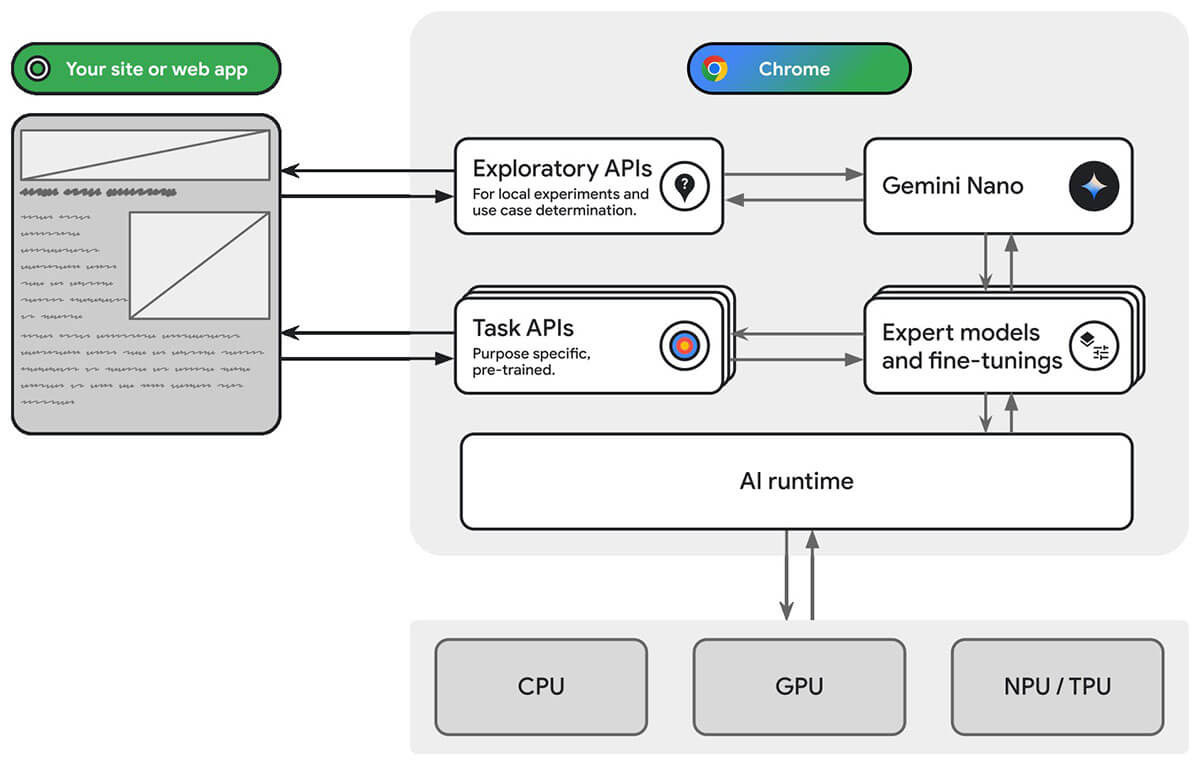

That's why we're developing web platform APIs and browser features designed to integrate AI models, including large language models (LLMs), directly into the browser. This includes Gemini Nano, the most efficient version of the Gemini family of LLMs, designed to run locally on most modern desktop and laptop computers. With built-in AI, your website or web application can perform AI-powered tasks without needing to deploy or manage its own AI models.

With built-in AI, your browser provides and manages foundation and expert models.

Compared to do-it-yourself on-device AI, built-in AI offers the following benefits:

Ease of deployment: As the browser distributes the models, it takes into account the capability of the device and manages updates to the model. This means you aren't responsible for downloading or updating large models over a network. You don't have to solve for storage eviction, runtime memory budget, serving costs, and other challenges.

Access to hardware acceleration: The browser's AI runtime is optimized to make the most out of the available hardware, be it a GPU, an NPU, or falling back to the CPU. Consequently, your app can get the best performance on each device.

How to get started

Join the early preview program to provide feedback on early-stage built-in AI ideas, and discover opportunities to test in-progress APIs through local prototyping.

Download the latest Chrome Dev build (version 127): google.com/chrome/dev/

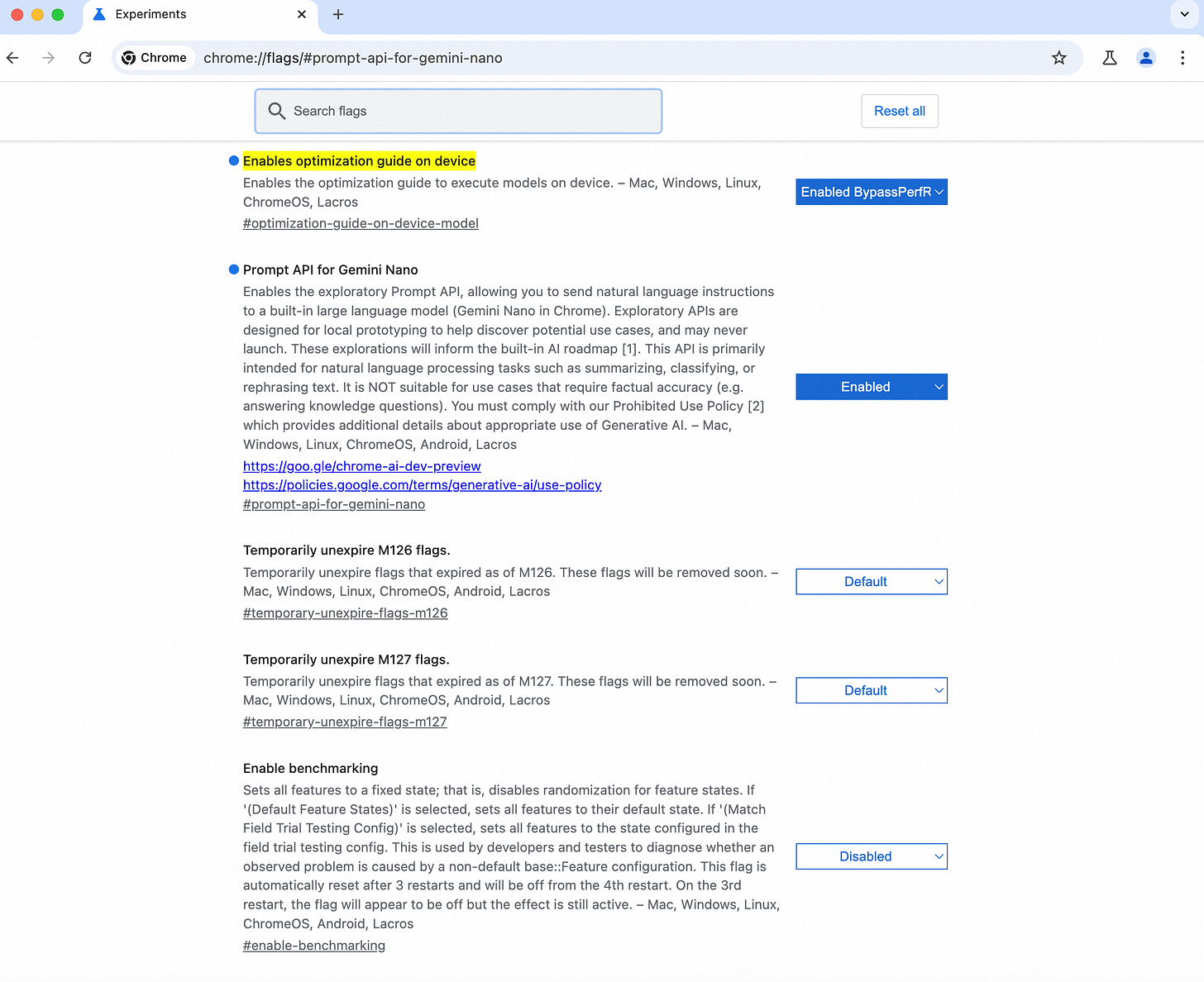

Open chrome://flags/#optimization-guide-on-device-model and set it to Enabled BypassPerfRequirement.

Open chrome://flags/#prompt-api-for-gemini-nano and set it to Enabled.

Relaunch Chrome so the flag changes take effect.

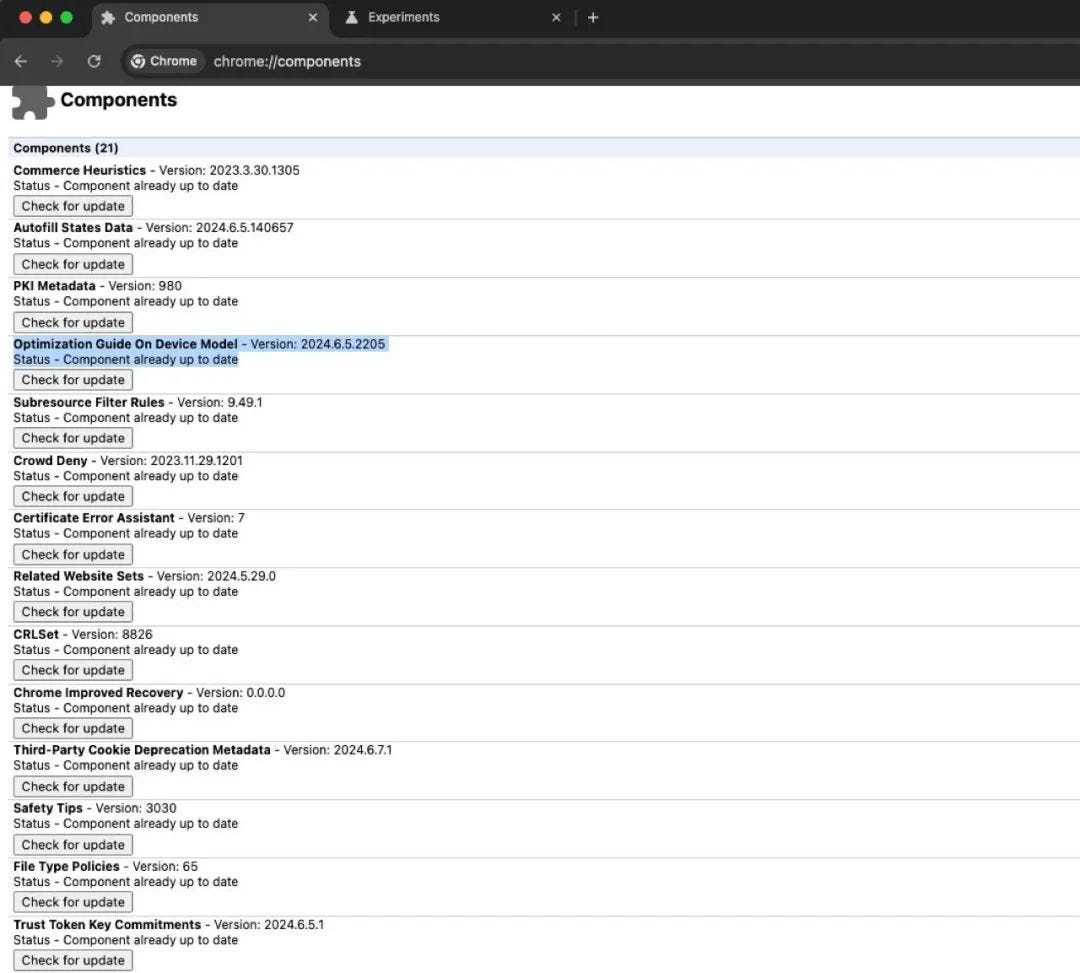

Wait for the model download to complete. To check its status, open chrome://components/ and look for the Optimization Guide On Device Model component.

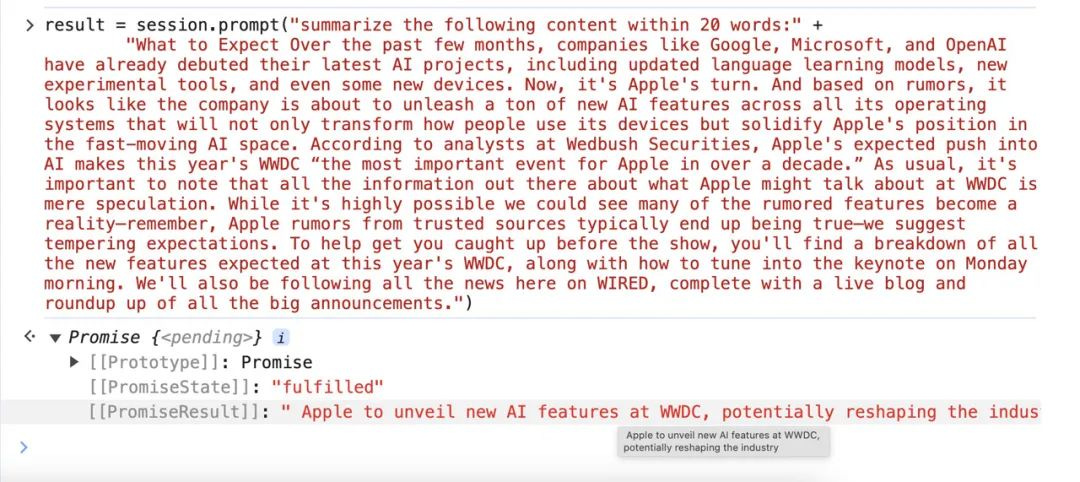
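Instead of checking chrome://components/ by hand, a page can also ask the browser whether the model is ready before prompting it. The sketch below assumes the early-preview shape of the Prompt API in Chrome 127 Dev: `window.ai.canCreateTextSession()` resolving to `"readily"`, `"after-download"`, or `"no"`, and `window.ai.createTextSession()` resolving to a session with `prompt()` and `destroy()` methods. This API was experimental and its names have changed in later Chrome releases, so treat these calls as assumptions. `askBuiltInModel` is a hypothetical helper; it takes the `ai` object as a parameter so it can be exercised with a stub outside the browser.

```javascript
// Sketch only: assumes the early-preview Prompt API shape from Chrome 127 Dev.
// In the browser, pass window.ai as `ai`. askBuiltInModel is a hypothetical helper.
async function askBuiltInModel(ai, promptText) {
  // Feature-detect: the API only exists when the flags above are enabled.
  if (!ai || typeof ai.createTextSession !== "function") {
    throw new Error("Built-in AI API not found; check chrome://flags");
  }

  // Assumed to resolve to "readily", "after-download", or "no".
  // Only proceed when the model is already on the device.
  const availability = await ai.canCreateTextSession();
  if (availability !== "readily") {
    throw new Error(`Model not ready (status: ${availability})`);
  }

  const session = await ai.createTextSession();
  try {
    // prompt() is assumed to resolve to the model's full text response.
    return await session.prompt(promptText);
  } finally {
    // Release the session if the API exposes destroy().
    if (typeof session.destroy === "function") {
      session.destroy();
    }
  }
}

// Usage in the DevTools console (Chrome 127 Dev with the flags enabled):
// askBuiltInModel(window.ai, "Write a haiku about the web.").then(console.log);
```

Passing `ai` in explicitly, rather than reading `window.ai` inside the function, keeps the feature detection in one place and makes the helper easy to test without a browser.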

Open the DevTools console and enter:

`window.ai.createTextSession()`

Get started with the Gemini API

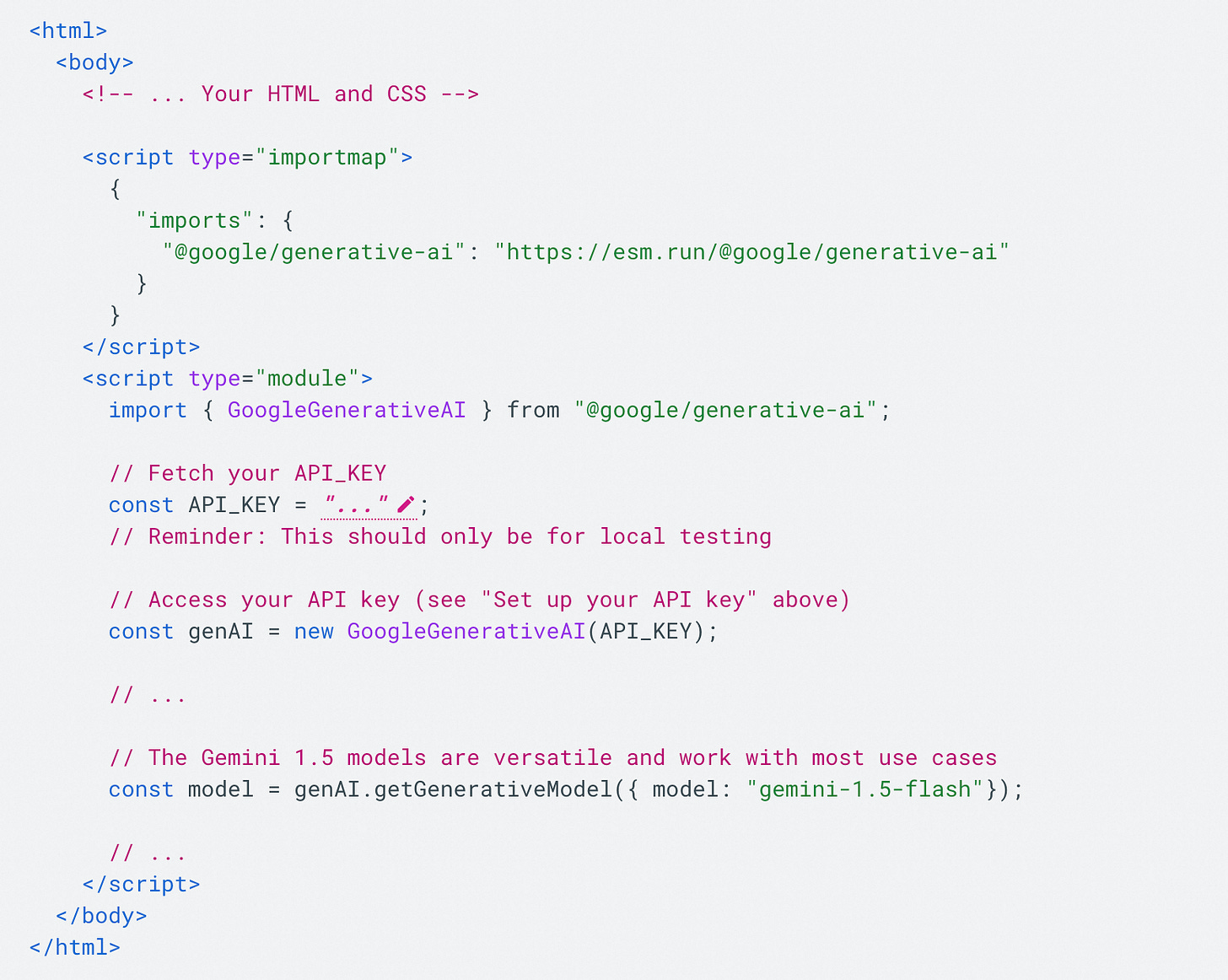

Before calling the Gemini API, you need to set up your project: obtain an API key, import the SDK, and initialize the generative model.

When the prompt input includes only text, use a Gemini 1.5 model or the Gemini 1.0 Pro model with generateContent to generate text output: