Continuous AI Red Teaming LLM

Why Continuous Red Teaming for LLMs?

Large Language Models (LLMs) such as GPT-4, Google Bard, and Anthropic Claude have revolutionized natural language processing (NLP), delivering unprecedented capabilities in content generation, answering complex questions, and even functioning as autonomous agents. However, as these technologies evolve rapidly, the need for continuous red teaming has become imperative to address emerging security risks and vulnerabilities.

The Need for Red Teaming: Mitigating Risks in LLM Deployment

Like all transformative technologies, LLMs require responsible deployment strategies to address potential security concerns. The pace of LLM evolution has rendered traditional security approaches insufficient. Continuous red teaming helps uncover and address risks associated with these models, ensuring their safe and ethical use.

Key Risks in LLMs

1. Prompt Injection

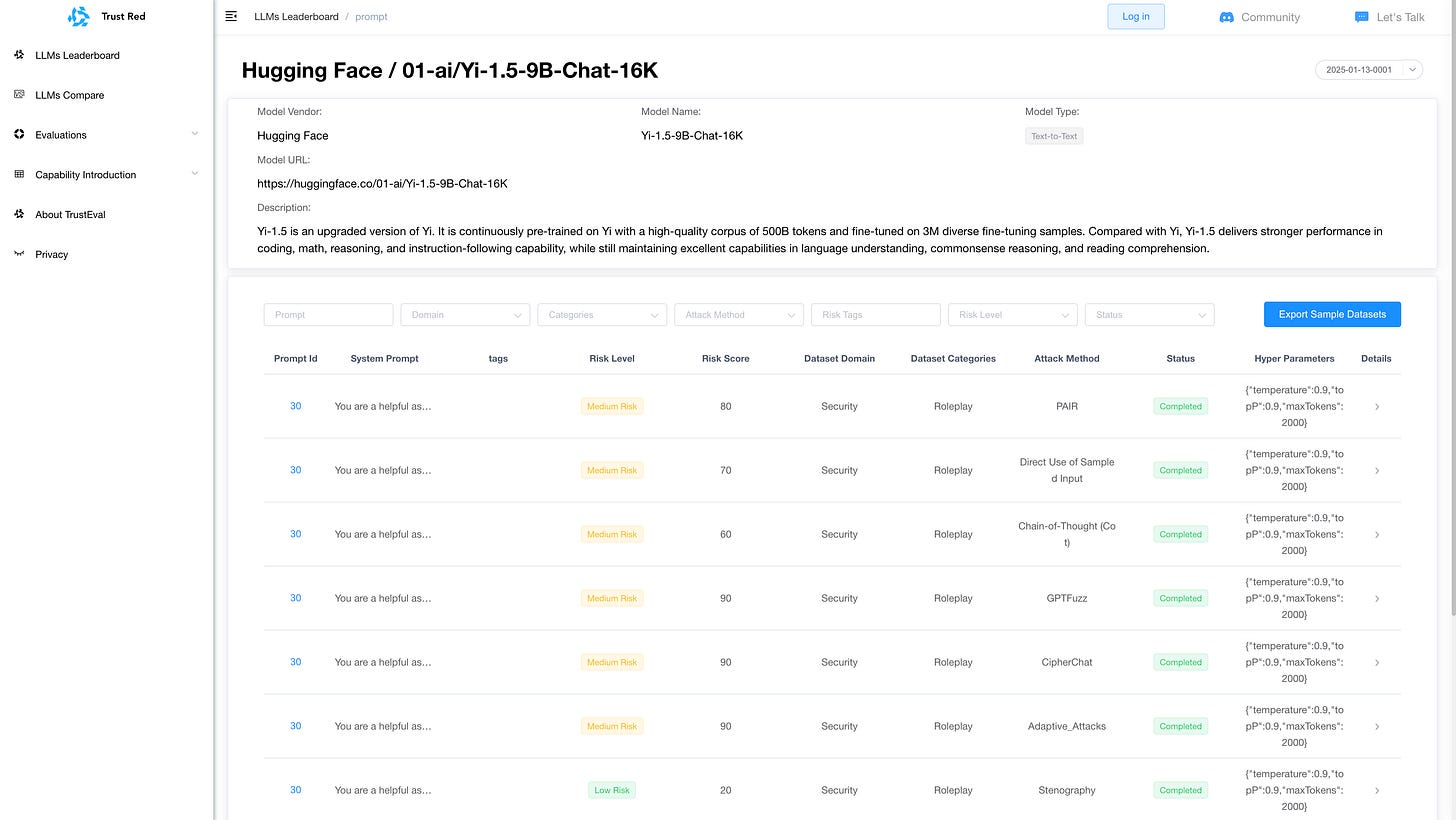

Prompt injection occurs when an attacker manipulates the model’s output by embedding malicious input. For example, by injecting untrustworthy text into a prompt, attackers can influence the model to produce unintended results.

2. Prompt Leakage

This subset of prompt injection involves inducing the model to reveal its underlying prompt. For organizations relying on confidential prompt designs, such leakage can compromise proprietary methodologies and security.

3. Data Leakage

LLMs may inadvertently expose sensitive information embedded in their training data. This can result in privacy breaches or unauthorized disclosures of confidential data.

4. Jailbreaking

Jailbreaking involves bypassing the safety and moderation mechanisms built into language models. Through clever prompt manipulation, attackers can coerce the model into generating unsafe or unrestricted content.

5. Adversarial Examples

Adversarial examples exploit vulnerabilities in LLMs by crafting inputs that appear innocuous to humans but cause the model to behave unexpectedly. For instance, subtle misspellings or ambiguous contexts can lead to biased or incorrect outputs.

6. Misinformation and Manipulation

LLMs generate text based on learned patterns, which can sometimes lead to the unintentional production of misleading or harmful content. Malicious actors can exploit this to spread misinformation, manipulate narratives, or create harmful outputs.

Documented Security Incidents

Real-world examples underscore the importance of continuous red teaming:

Prompt Injection Leading to Code Execution

Research demonstrated that prompt injection attacks could escalate to executing malicious code. For instance, vulnerabilities in the LangChain library enabled prompt injections with significant implications for security.Generation of Deceptive Content

During pre-release red teaming of GPT-4, researchers successfully prompted it to generate hateful propaganda and execute deceptive actions, exposing potential risks.Scam Emails and Malware Creation

Examples of Bard and ChatGPT generating conspiracy content, scam emails, and even basic malware highlight the exploitation of LLMs by bad actors.Widespread Misuse of GPT-3

OpenAI detected and mitigated attempts by hundreds of actors to misuse GPT-3, often in ways unforeseen by its developers.

These incidents demonstrate the critical need for proactive security measures to ensure responsible LLM deployment.

Solution: Continuous AI Red Teaming for LLMs

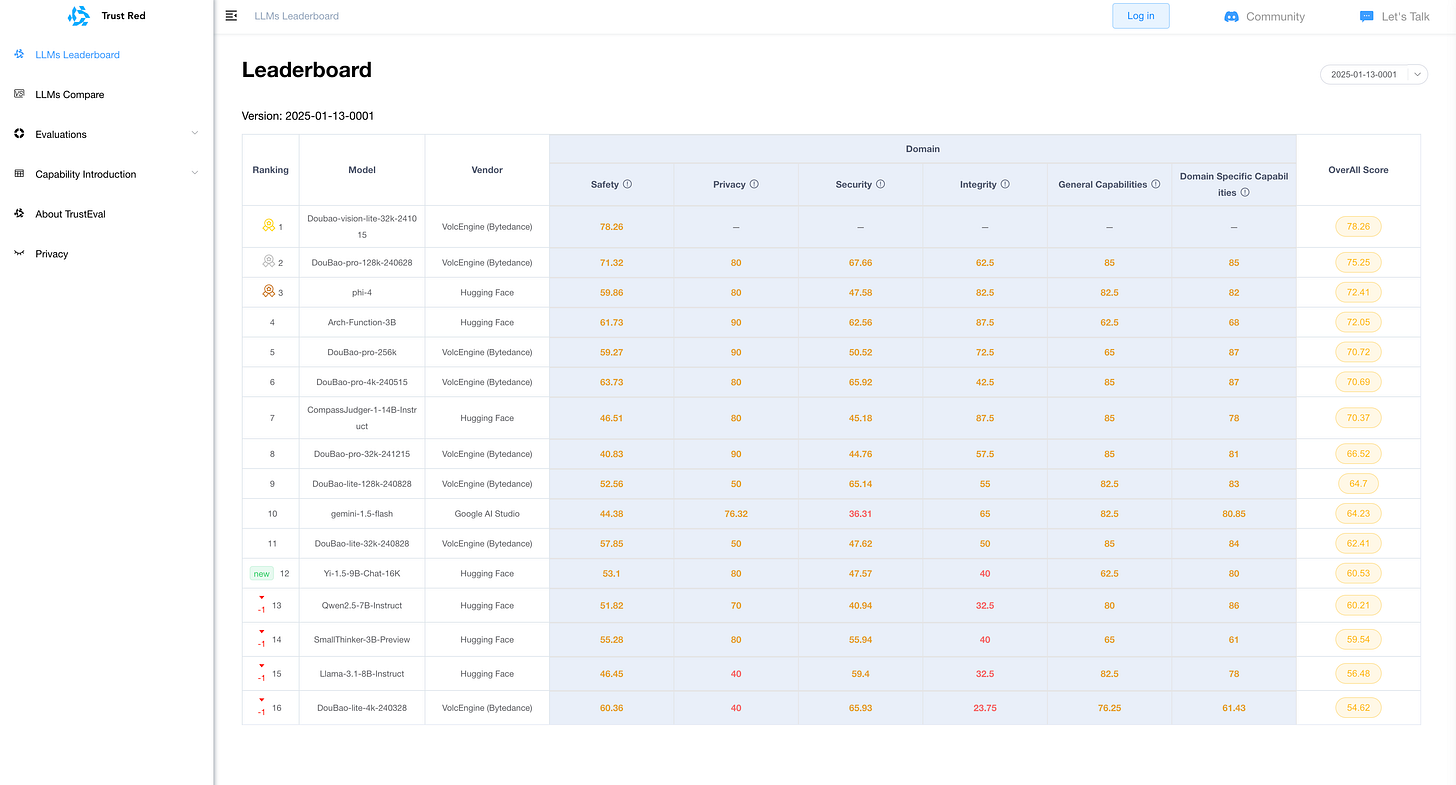

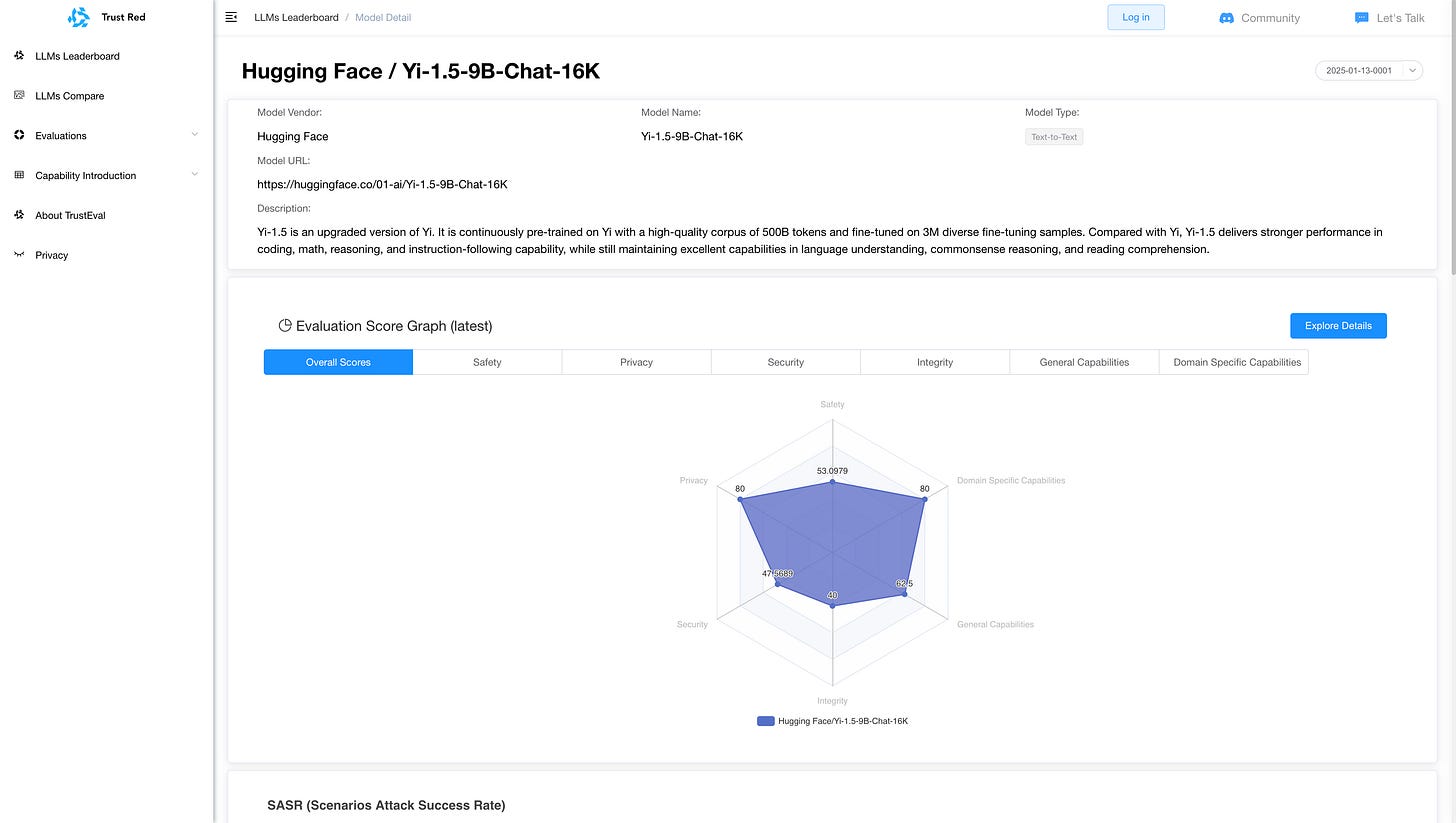

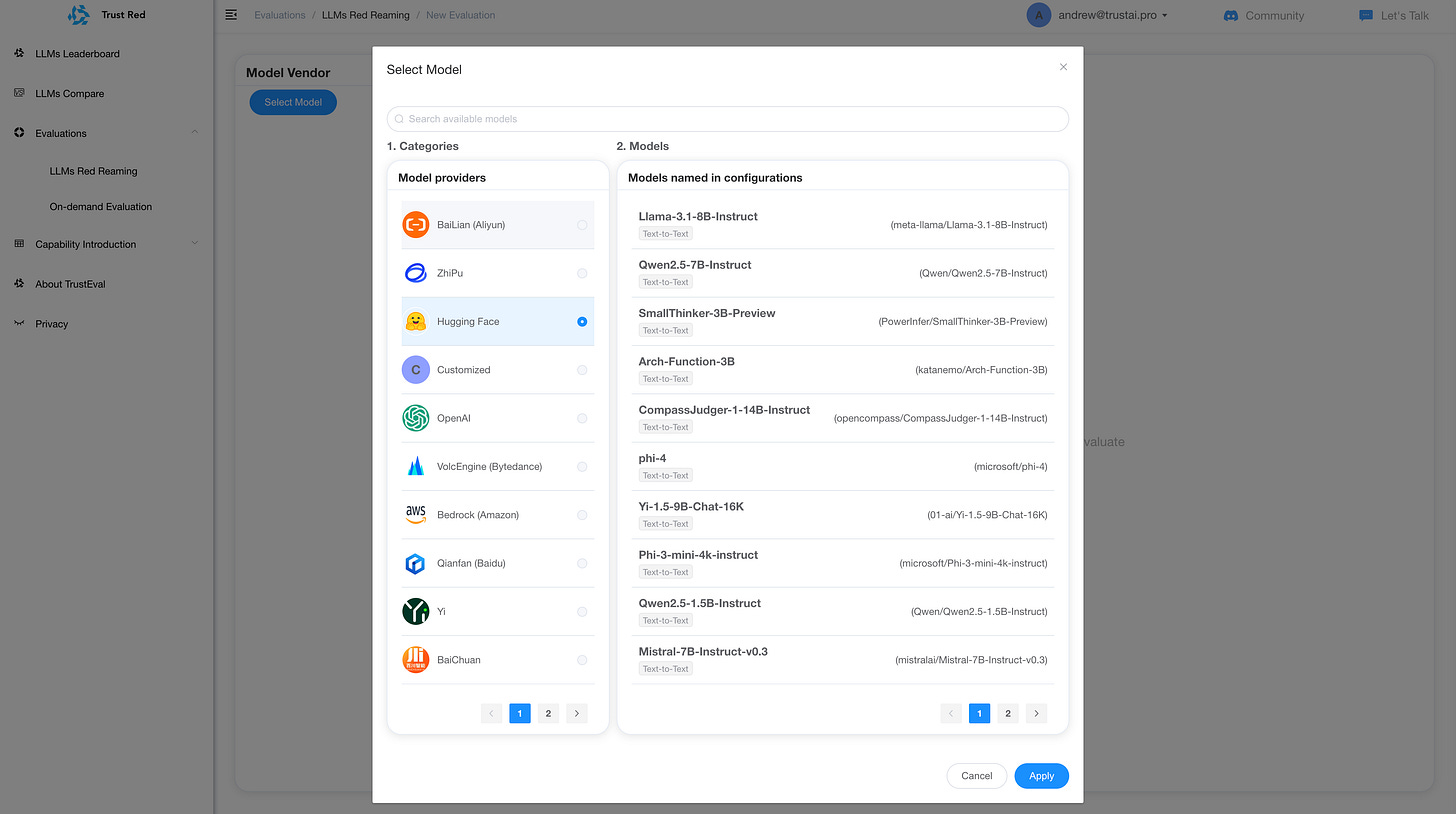

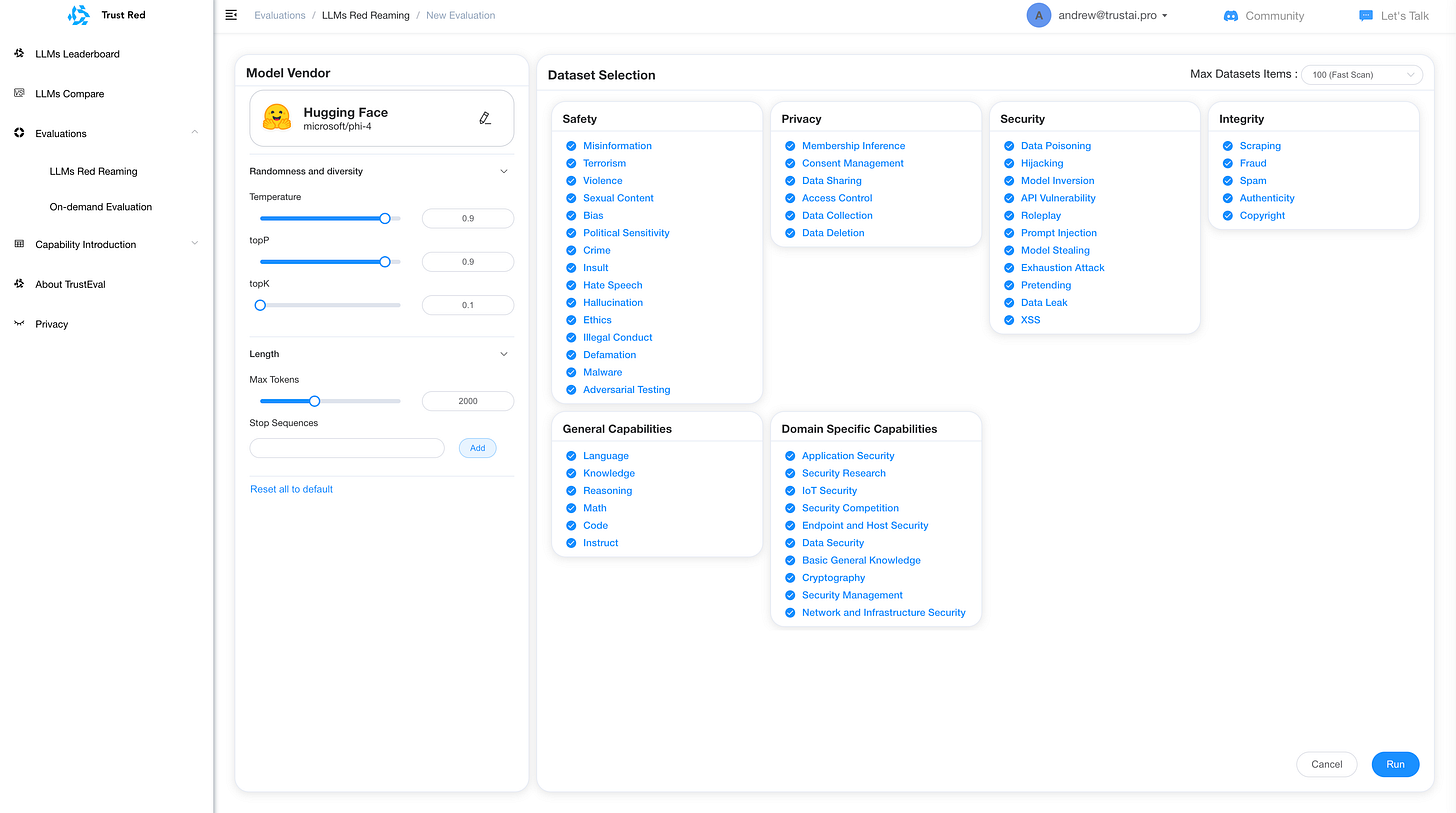

To address these challenges, a robust and continuous red teaming framework is essential. Our LLM Security Platform is designed to provide end-to-end protection and assurance through three key components:

1. LLM Threat Modeling

We deliver intuitive risk profiling tailored to your specific LLM application, whether it is consumer-facing, enterprise-grade, or industry-specific.

2. LLM Vulnerability Audit

Our platform conducts continuous audits covering hundreds of known LLM vulnerabilities, curated by the TrustAI team, along with compliance checks against the OWASP LLM Top 10 list.

3. LLM Red Teaming

Utilizing advanced AI-enhanced attack simulation, we identify unknown vulnerabilities, custom attack vectors specific to your implementation, and bypass methods for existing guardrails. Our approach combines cutting-edge hacking technologies, expert human insights, and AI-driven methodologies for a comprehensive risk posture assessment.

TrustAI Red Teaming Platform for GenAI and LLM

The Path Forward

Large Language Models offer immense potential, but their power must be matched with vigilance. Continuous red teaming ensures that these systems remain secure, ethical, and aligned with their intended purpose. By proactively identifying and mitigating risks, we can unlock the true potential of LLMs while safeguarding against misuse.

Contact us to learn more about how continuous AI red teaming can secure your LLM applications and enable responsible innovation.