Artificial Intelligence: The new attack surface

Background

Anytime something new comes along, there's always going to be somebody that tries to break it. AI is no different and this is why it seems we can't have nice things.

In fact, we've already seen more than 6000 research papers, exponential growth, that have been published related to adversarial AI examples.

Now in this article, we're going to take a look at six different types of attacks about AI and try to understand them better.

Prompt Injection Attacks

You might have heard of a SQL injection attack. When we're talking about an AI, well, we have prompt injection attacks.

What does a prompt injection attack involve? Well, think of it as sort of like a social engineering of the AI. Attacker will convince LLM to do things it shouldn't do. Sometimes it's referred to as jailbreaking,

The attackers basically do this in one of two ways.

There's a direct injection attack, where they send a command into the AI and tells it to do something: “pretend that this is the case”, or, “I want you to play a game that looks like this: I want you to give me all wrong answers.”. And now it starts operating out of the context that the LLM originally intended it to, and that can affect the output.

Another example of this is an indirect attack where the AI is designed to go out and retrieve information from an external source, maybe a web page. And in that web page, attackers have embedded an injection attack. That then gets consumed by the AI, and it starts following those instructions.

In fact, we believe this is probably the number one set of attacks against large language models, according to the OWASP report.

Infection Attacks

So we know that you can infect a computing system with malware. In fact, you can infect an AI system with malware as well.

In fact, you could use things like Trojan horses or backdoors in LLM model, which come from your supply chain.

And if you think about this, most people are never going to build a large language model because it's too compute intensive, requires a lot of expertise and a lot of resources. So most of the developers are going to download these models from other sources.

And what if someone in that supply chain has infected one of those models? The model then could be suspect. It could do things that we don't intended to do.

Evasion Attacks

Another type of attack class is something called evasion. And in evasion Attackers are basically modifying the inputs into the AI to make it come up with results that we were not wanting.

An example of this attack that has been cited in many cases was called “stop sign evasion attack”, where someone was using a self-driving car or a vision related system that was designed to recognize street signs. And normally it would recognize the stop sign. But someone came along and put a small sticker, something that would not confuse you or me, but it confused the AI massively to the point where it thought it was not looking at a stop sign. It thought it was looking at a speed limit sign, which is a big difference and a big problem if you were in a self-driving car that can't figure out the difference between those two.

So sometimes the AI can be fooled and that's an evasion attack in that case.

Poisoning Attacks

Another type of attack class is poisoning. Attackers poison the data that's going into the AI. And this can be done intentionally by someone who has bad purposes in mind.

In this case, if you think about our data that we're going to use to train the AI, we've got lots and lots of data and sometimes introducing just a small error, a small factual error, into the data is all it takes in order to get bad results. In fact, there was one research study that came out and found that as little as 0.001% of error introduced in the training data for an AI was enough to cause results to be anomalous and be wrong.

Extraction Attacks

Another class of attack is what we refer to as extraction.

Think about the AI system that we built and the valuable information that's in it. So we've got in this system, potentially intellectual property that's valuable to our organization. We've got data that we maybe used to train and tune the models that are in here. We might have even built a model ourselves, and all of these things we consider to be valuable assets to the organization.

So what if someone decided they just wanted to steal all of that stuff?

Well, one thing attackers could do is a set of extensive queries into the system, they maybe ask it a little bit and get a little bit of information each time. And they keep getting more and more information sort of slow and low below the radar. In enough time, they can built their own database, and basically lifted your model and stolen your IP, extracted it from your AI.

Denial of Service Attacks

And the final class of attack that I want to discuss is denial of service.

This is basically just overwhelm the system. Attackers send too many requests into the AI system and the whole thing goes boom. When this happened, the AI system cannot keep up and therefore it denies access to all the other legitimate users.

Panoramic View of attack surface for AI

Now, I hope you understand that AI is the new attack surface. We need to be smart so that we can guard against these new threats.

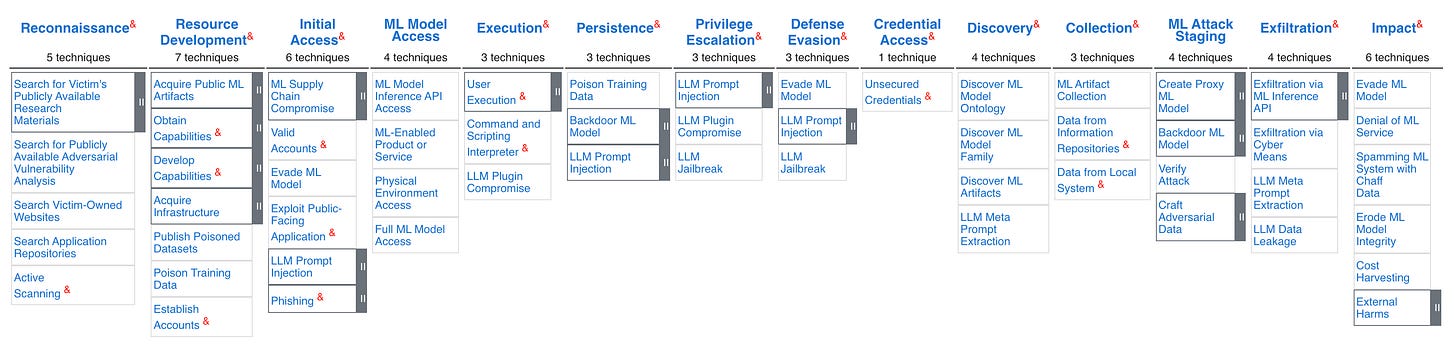

And I'm going to recommend more resources for you. The ATLAS Matrix provides a more macro and panoramic perspective so that you can understand the AI risks your business may face from a macro level.

The ATLAS Matrix shows the progression of tactics used in attacks as columns from left to right. View the ATLAS matrix highlighted alongside ATT&CK Enterprise techniques on the ATLAS Navigator.