AI Model Context Protocol (MCP) Security Considerations and Checklist

The Model Context Protocol (MCP) is an open standard that provides a universal way to connect AI models and agentic applications to various data sources and tools. It's how applications can supply context (documents, database records, API data, web search results, etc.) to AI models. This capability is very powerful, but it also raises important cybersecurity questions.

When AI assistants gain access to sensitive files, databases, or services via MCP, organizations must ensure those interactions are secure, authenticated, and auditable.

The MCP architecture (host, client, servers) inherently creates defined points where security controls can be applied. Let’s explore how MCP can be leveraged for security purposes (from securing model interactions and logging their actions, to guarding against adversarial inputs and ensuring compliance with data protection).

Overview of MCP and Its Security Architecture

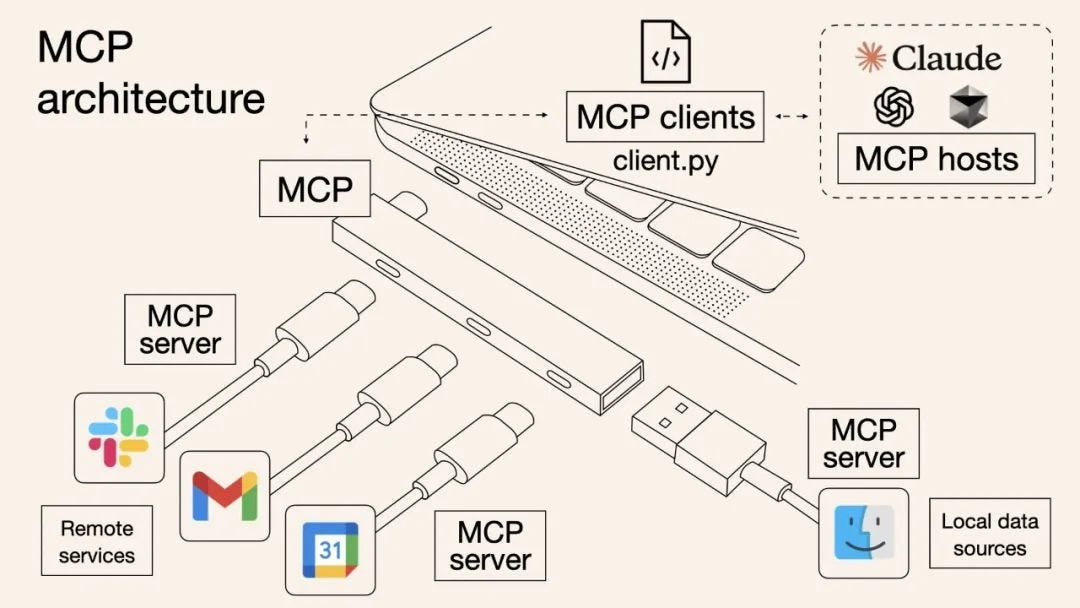

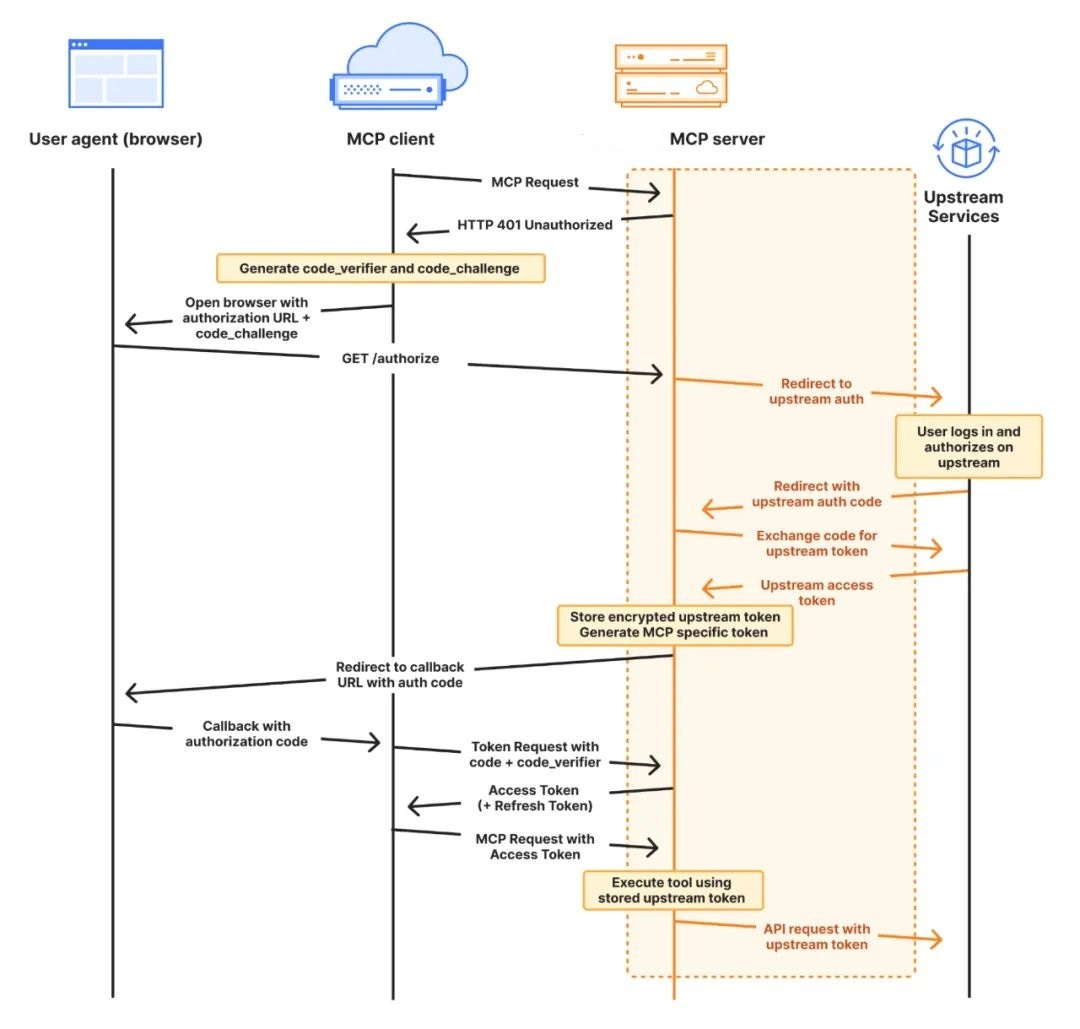

MCP follows a client-server model with clear separation of roles.

An MCP Host (the AI application or agent) connects via an MCP Client library to one or more MCP Servers.

Each server exposes a specific set of capabilities (such as reading files, querying a database, or calling an API) through a standardized protocol. This is why people refer to MCP as the “USB-C port for AI applications”.

By design, this architecture introduces security boundaries: the host and servers communicate only via the MCP protocol, which means security policies can be enforced at the protocol layer. For example:

An MCP server can restrict which files or database entries it will return, regardless of what the AI model requests.
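As a rough illustration of this kind of server-side restriction, the sketch below confines file reads to a single directory. The root path `/srv/mcp-data` and the function name `resolve_allowed` are hypothetical and not part of MCP or any SDK; a real server would plug a check like this into its own file-reading handler.

```python
from pathlib import Path

# Hypothetical directory this server is allowed to serve files from.
ALLOWED_ROOT = Path("/srv/mcp-data")


def resolve_allowed(requested: str, root: Path = ALLOWED_ROOT) -> Path:
    """Return the resolved path if it stays inside root, else raise PermissionError."""
    root_resolved = root.resolve()
    # Joining and resolving collapses ".." segments and follows symlinks,
    # so the containment check runs on the real target path.
    candidate = (root_resolved / requested).resolve()
    if not candidate.is_relative_to(root_resolved):  # Python 3.9+
        raise PermissionError(f"path escapes allowed root: {requested}")
    return candidate
```

The check is applied no matter what the model asks for, so a request such as `../../etc/passwd` is rejected before any file is opened.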

Likewise, the host can decide which servers to trust and connect to.
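On the host side, one simple way to express this trust decision is an allowlist that is checked before any connection is made. The sketch below assumes servers are reached over an HTTP-based transport; the host name `mcp.internal.example` and the function `may_connect` are illustrative assumptions only.

```python
from urllib.parse import urlsplit

# Hypothetical set of server host names this host application trusts.
TRUSTED_HOSTS = frozenset({"mcp.internal.example"})


def may_connect(server_url: str, trusted_hosts: frozenset = TRUSTED_HOSTS) -> bool:
    """Allow a connection only to HTTPS URLs whose host is on the allowlist."""
    parts = urlsplit(server_url)
    # Compare the parsed host name, not the raw string, so that lookalike
    # URLs such as "https://mcp.internal.example@evil.example/" are rejected.
    return parts.scheme == "https" and parts.hostname in trusted_hosts
```

Anything not explicitly listed is refused by default, which matches the deny-by-default posture a host would typically want.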

This clear delineation of components makes it easier to apply the Zero Trust principle (treating each component and request as potentially untrusted until verified).

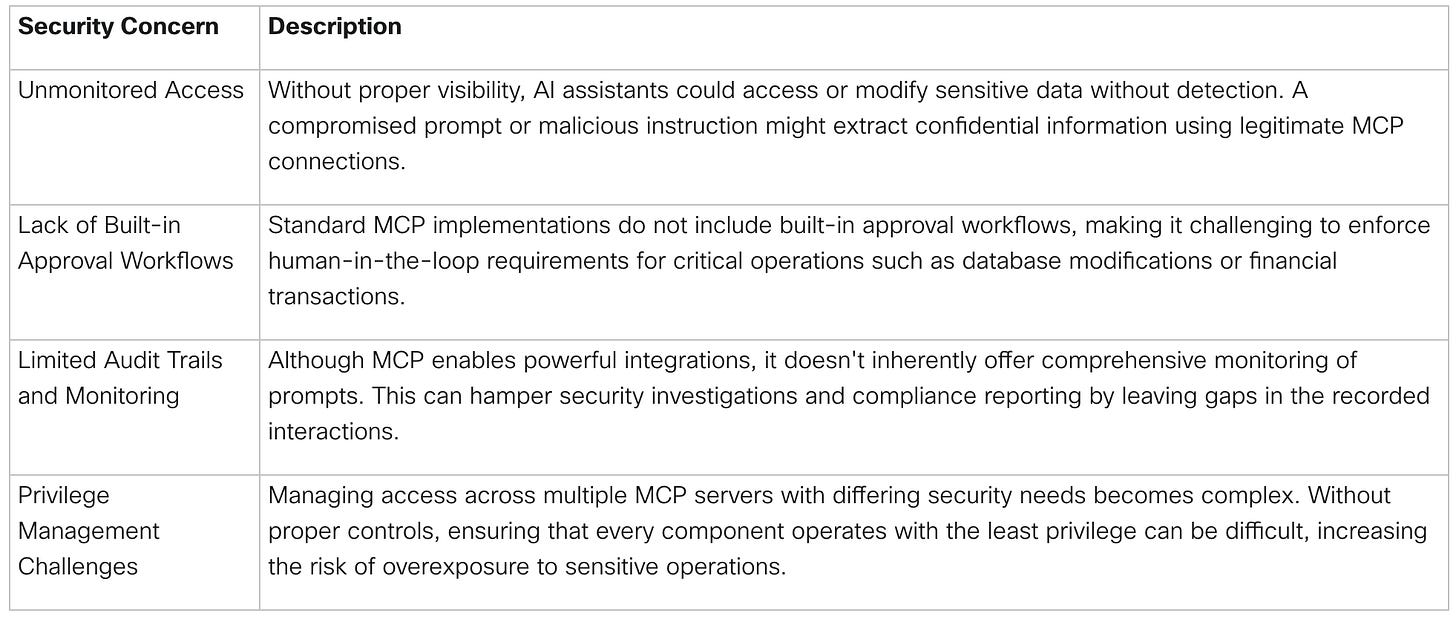

Security Considerations when Using MCP

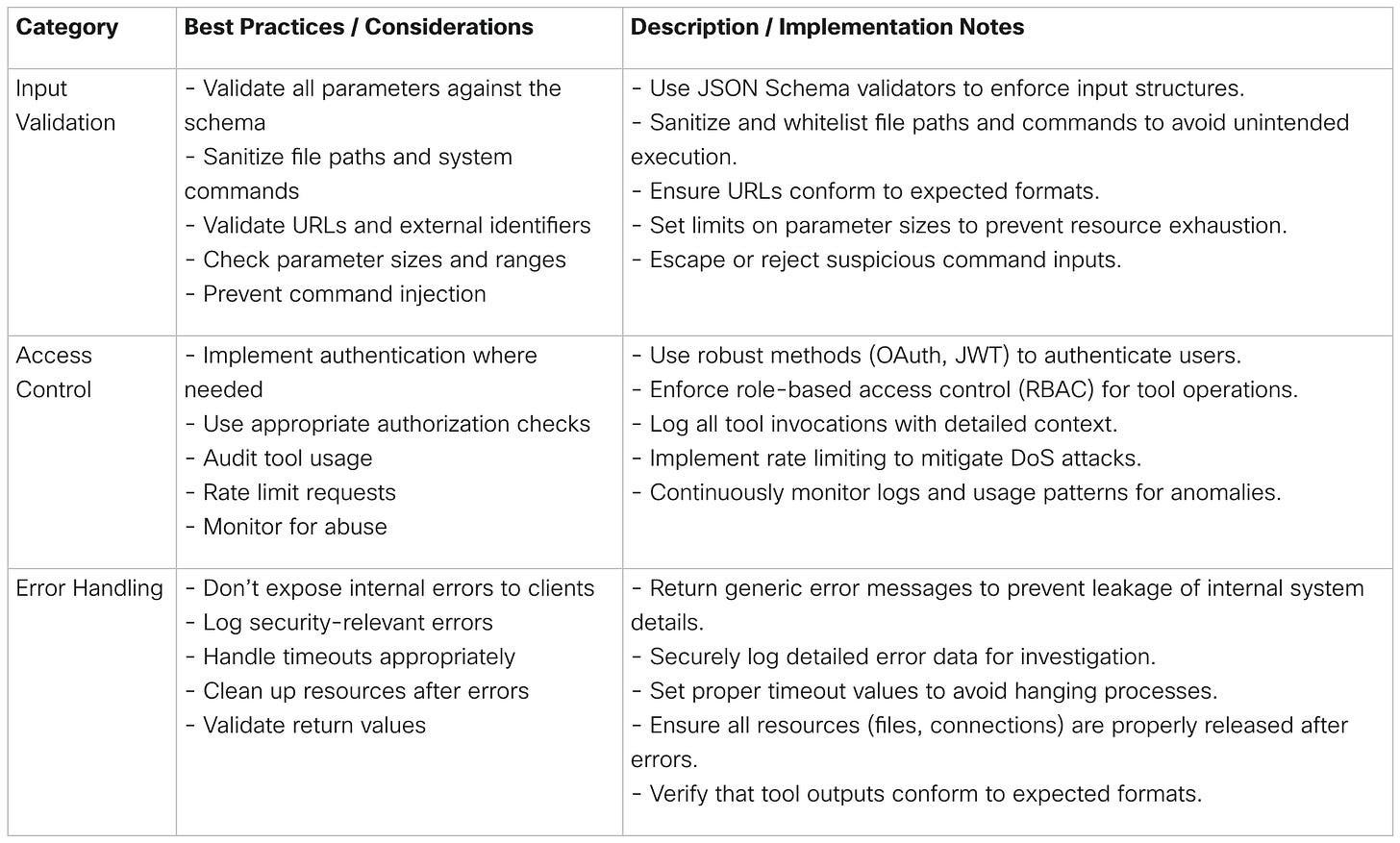

The following table outlines a few security considerations when implementing MCP.

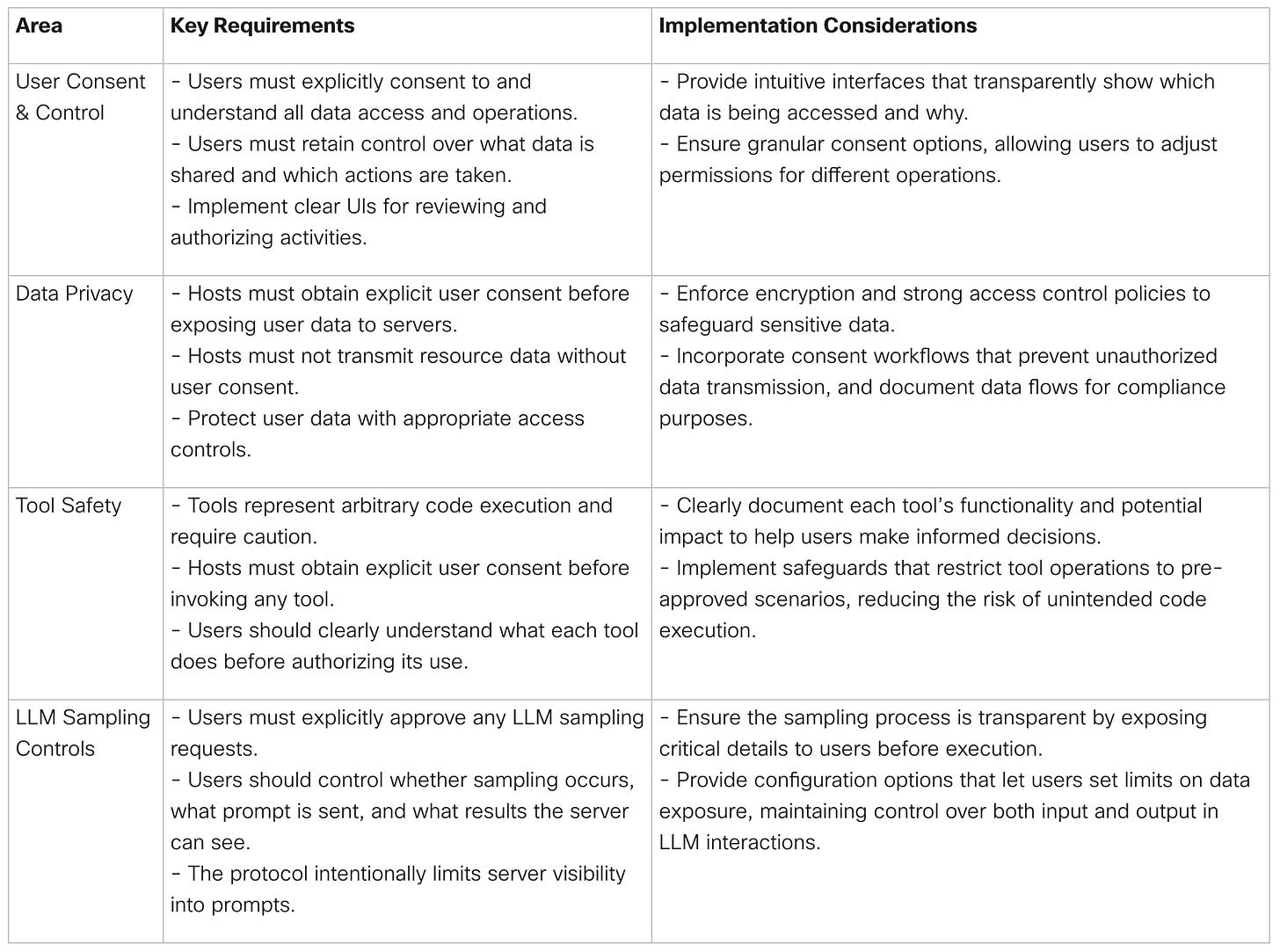

The following table lists additional security concerns and key requirements for implementing MCP.

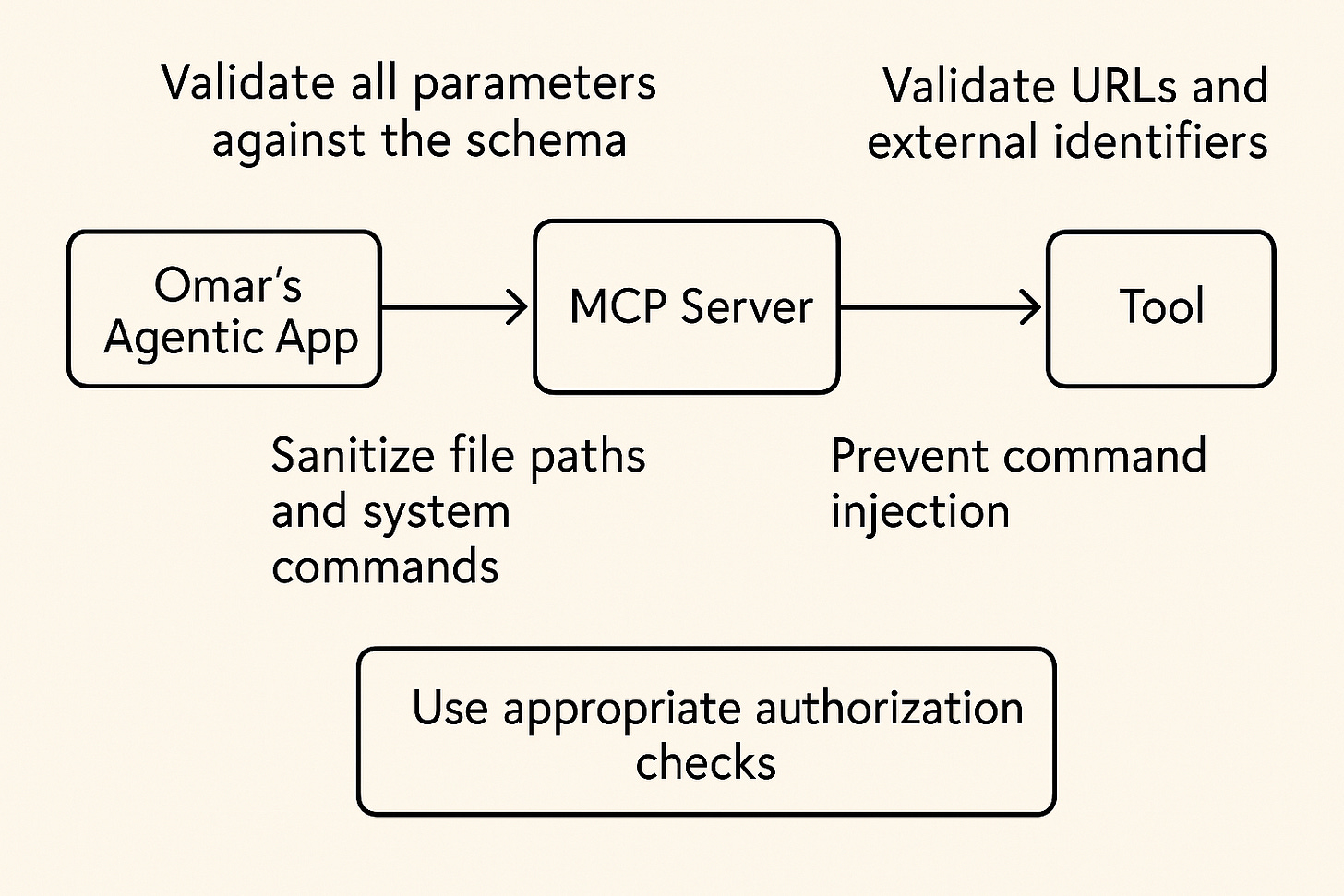

Security Considerations when Exposing Tools

When exposing tools, it's very important to implement robust security measures to safeguard against malicious inputs, unauthorized access, and other potential MCP-related vulnerabilities in AI applications.
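To make the point about malicious inputs concrete, here is a minimal sketch of strict argument validation for a hypothetical database query tool. The argument names `table` and `limit`, the limits chosen, and the function `validate_query_args` are assumptions made for this example, not something defined by MCP.

```python
import re

# Assumed policy: short lowercase identifiers only, and a capped row count.
_TABLE_RE = re.compile(r"[a-z_]{1,32}")
MAX_LIMIT = 100


def validate_query_args(args: dict) -> dict:
    """Validate tool arguments supplied by the model; raise ValueError if unsafe."""
    unexpected = set(args) - {"table", "limit"}
    if unexpected:
        raise ValueError(f"unexpected arguments: {sorted(unexpected)}")

    table = args.get("table")
    if not isinstance(table, str) or not _TABLE_RE.fullmatch(table):
        raise ValueError("invalid table name")

    limit = args.get("limit", 10)
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(limit, bool) or not isinstance(limit, int) or not 1 <= limit <= MAX_LIMIT:
        raise ValueError("limit must be an integer between 1 and 100")

    return {"table": table, "limit": limit}
```

Rejecting unknown arguments and out-of-range values up front means the tool body only ever sees inputs in a known-safe shape.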

The following table shows the best practices, considerations, and implementation notes.

Monitoring Agentic Implementations

TrustAI adopts a consistent and extensible approach for transmitting structured log data between AI services and monitoring systems. Our logging architecture allows developers to define verbosity thresholds on the client side, while AI applications emit standardized log entries that include severity levels, optional module identifiers, and rich, JSON-serializable context.

Yet in AI-native ecosystems where intelligent agents actively read from, write to, or infer over sensitive data, simple structured logging is not enough. TrustAI Auditable Intelligence ensures end-to-end transparency, embedding high-fidelity traceability and tamper-evident records into each AI-driven transaction. Going far beyond conventional logging protocols, TrustAI continuously verifies agent behaviors against policy baselines and provides advanced anomaly detection—empowering organizations to deploy AI with confidence, accountability, and regulatory compliance.
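One common, generic way to make records tamper-evident is a hash chain, in which each record stores the hash of the one before it. The sketch below illustrates that general technique only; it is not a description of how TrustAI works, and the record layout and function names are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev: str, payload: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same.
    body = json.dumps({"prev": prev, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_record(chain: list, payload: dict) -> dict:
    """Append a record whose hash covers its payload and the previous hash."""
    prev = chain[-1]["hash"] if chain else GENESIS
    record = {"prev": prev, "payload": payload, "hash": _digest(prev, payload)}
    chain.append(record)
    return record


def verify(chain: list) -> bool:
    """Recompute every hash; any edited, removed, or reordered record breaks the chain."""
    prev = GENESIS
    for record in chain:
        if record["prev"] != prev or _digest(record["prev"], record["payload"]) != record["hash"]:
            return False
        prev = record["hash"]
    return True
```

Because each hash depends on everything before it, changing an earlier record invalidates all later ones, which is what makes after-the-fact edits detectable.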

Learn how TrustAI makes observability verifiable and trust provable [here].